ZFS


This is a general usage article for ZFS on Gentoo. Those looking to install Gentoo with a ZFS root filesystem may prefer to start with ZFS/rootfs.

ZFS is a next-generation filesystem created by Matthew Ahrens and Jeff Bonwick. It was designed around a few key ideas:

- Administration of storage should be simple.

- Redundancy should be handled by the filesystem.

- Filesystems should never be taken offline for repair.

- Automated simulation of worst-case scenarios before shipping code is important.

- Data integrity is paramount.

Development of ZFS started in 2001 at Sun Microsystems. It was released under the CDDL in 2005 as part of OpenSolaris. Pawel Jakub Dawidek ported ZFS to FreeBSD in 2007. Brian Behlendorf at LLNL started the ZFSOnLinux project in 2008 to port ZFS to Linux for High Performance Computing. Oracle purchased Sun Microsystems in 2010 and discontinued OpenSolaris later that year.

The Illumos project was started to replace OpenSolaris, and roughly 2/3 of the core ZFS team resigned, including Matthew Ahrens and Jeff Bonwick. Many took jobs at companies which continue to develop OpenZFS, initially as part of the Illumos project. The 1/3 of the ZFS core team at Oracle that did not resign continued development of an incompatible proprietary branch of ZFS in Oracle Solaris.

The first release of Solaris included a few innovative changes that were under development prior to the mass resignation. Subsequent releases of Solaris have included fewer and less ambitious changes. Today, a growing community continues development of OpenZFS across multiple platforms, including FreeBSD, Illumos, Linux and Mac OS X.

Gentoo's ZFS maintainers discourage users from using ZFS (or any other exotic filesystem) for PORTAGE_TMPDIR, because Portage tends to reveal bugs in such filesystems. As of May 2022, however, using ZFS for PORTAGE_TMPDIR should be fine.

Features

A detailed list of features can be found in a separate article.

Installation

Modules

There are out-of-tree Linux kernel modules available from the ZFSOnLinux Project.

Since version 0.6.1, the OpenZFS project has considered ZFS "ready for wide scale deployment on everything from desktops to super computers".

All changes to the git repository are subject to regression tests by LLNL.

USE flags

USE flags for sys-fs/zfs (Userland utilities for ZFS Linux kernel module)

- +rootfs: Enable dependencies required for booting off a pool containing a rootfs

- custom-cflags: Build with user-specified CFLAGS (unsupported)

- debug: Enable extra debug codepaths, like asserts and extra output. If you want to get meaningful backtraces see https://wiki.gentoo.org/wiki/Project:Quality_Assurance/Backtraces

- dist-kernel: Enable subslot rebuilds on Distribution Kernel upgrades

- kernel-builtin: Disable dependency on sys-fs/zfs-kmod under the assumption that ZFS is part of the kernel source tree

- minimal: Don't install python scripts (arcstat, dbufstat etc) and avoid dependency on dev-lang/python

- nls: Add Native Language Support (using gettext - GNU locale utilities)

- pam: Install zfs_key pam module, for automatically loading zfs encryption keys for home datasets

- python: Add optional support/bindings for the Python language

- selinux: !!internal use only!! Security Enhanced Linux support, this must be set by the selinux profile or breakage will occur

- split-usr: Enable behavior to support maintaining /bin, /lib*, /sbin and /usr/sbin separately from /usr/bin and /usr/lib*

- test-suite: Install regression test suite

- unwind: Add support for call stack unwinding and function name resolution

- verify-sig: Verify upstream signatures on distfiles

Emerge

To install ZFS, run:

root #emerge --ask sys-fs/zfs

Remerge sys-fs/zfs-kmod after every kernel compile, even if the kernel changes are trivial. When recompiling the kernel after merging the kernel modules, users may encounter problems with zpool entering uninterruptible sleep (unkillable process) or crashing on execute. External kernel modules, including sys-fs/zfs-kmod, can be rebuilt with:

root #emerge -va @module-rebuild

Alternatively, set USE=dist-kernel when using the Distribution Kernel.

ZFS Event Daemon Notifications

The ZED (ZFS Event Daemon) monitors events generated by the ZFS kernel module. When a zevent (ZFS Event) is posted, the ZED will run any ZEDLETs (ZFS Event Daemon Linkage for Executable Tasks) that have been enabled for the corresponding zevent class.

/etc/zfs/zed.d/zed.rc

##
# Email address of the zpool administrator for receipt of notifications;
# multiple addresses are delimited by whitespace.
# Email will only be sent if ZED_EMAIL_ADDR is defined.
# Enabled by default; comment to disable.
#
ZED_EMAIL_ADDR="admin@example.com"

##
# Notification verbosity.
# If set to 0, suppress notification if the pool is healthy.
# If set to 1, send notification regardless of pool health.
#
ZED_NOTIFY_VERBOSE=1
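The ZEDLETs read zed.rc as a shell fragment. One way to check which notification settings will actually take effect (for instance, after commenting a line out) is to source the file in a subshell. show_zed_notify is a hypothetical helper and is not part of ZFS. It only looks at the two variables shown above and assumes the file contains nothing but comments and variable assignments, as in the excerpt.

```shell
# Hypothetical helper: print the notification settings ZEDLETs would see.
# zed.rc is sourced in a subshell so nothing leaks into the caller.
show_zed_notify() {
    rc="${1:-/etc/zfs/zed.d/zed.rc}"
    (
        # Start clean so inherited environment variables cannot hide
        # a setting that is commented out in the file.
        unset ZED_EMAIL_ADDR ZED_NOTIFY_VERBOSE
        . "$rc" || exit 1
        printf 'email=%s\n' "${ZED_EMAIL_ADDR:-<unset, no email sent>}"
        printf 'verbose=%s\n' "${ZED_NOTIFY_VERBOSE:-<unset>}"
    )
}

# Usage: show_zed_notify   (defaults to /etc/zfs/zed.d/zed.rc)
```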

OpenRC

Add the zfs scripts to runlevels for initialization at boot:

root #rc-update add zfs-import boot

root #rc-update add zfs-mount boot

root #rc-update add zfs-load-key boot

root #rc-update add zfs-share default

root #rc-update add zfs-zed default

Only the first two are necessary for most setups. zfs-load-key is required for ZFS native encryption. zfs-share is for people using NFS shares, while zfs-zed is for the ZFS Event Daemon, which handles disk replacement via hot spares and email notification of failures.

When using ZFS as the root filesystem, or for ZFS swap, add zfs-import and zfs-mount to the sysinit runlevel so that the filesystems are accessible during the boot and shutdown processes.
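The runlevel choices above can be summarized as a small decision helper. zfs_openrc_plan is a hypothetical function that only prints rc-update commands for review and never runs them. It mirrors the text above and nothing more. For instance, it does not try to handle an encrypted root dataset, whose keys are typically loaded by the initramfs instead.

```shell
# Hypothetical helper: print (but never run) the rc-update commands that
# match the guidance above. Options: rootfs, encryption, nfs, zed.
zfs_openrc_plan() {
    level=boot encryption=no nfs=no zed=no
    for opt in "$@"; do
        case "$opt" in
            rootfs)     level=sysinit ;;   # root or swap on ZFS: import/mount early
            encryption) encryption=yes ;;
            nfs)        nfs=yes ;;
            zed)        zed=yes ;;
            *) echo "unknown option: $opt" >&2; return 1 ;;
        esac
    done
    echo "rc-update add zfs-import $level"
    echo "rc-update add zfs-mount $level"
    if [ "$encryption" = yes ]; then echo "rc-update add zfs-load-key boot"; fi
    if [ "$nfs" = yes ]; then echo "rc-update add zfs-share default"; fi
    if [ "$zed" = yes ]; then echo "rc-update add zfs-zed default"; fi
}

# Usage: zfs_openrc_plan rootfs zed
```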

systemd

Enable the service so it is automatically started at boot time:

root #systemctl enable zfs.target

To manually start the daemon:

root #systemctl start zfs.target

In order to mount ZFS pools automatically on boot, the following services and targets need to be enabled:

root #systemctl enable zfs-import-cache

root #systemctl enable zfs-mount

root #systemctl enable zfs-import.target

Kernel

sys-fs/zfs requires Zlib kernel support (module or builtin).

Cryptographic API --->

Compression --->

<*> Deflate
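Before building sys-fs/zfs-kmod, the kernel configuration can be checked for the Deflate option. This sketch assumes two things: the menu entry above corresponds to the CONFIG_CRYPTO_DEFLATE symbol, and the configuration lives in /usr/src/linux/.config. check_deflate is a hypothetical helper name.

```shell
# Hypothetical helper: report whether a kernel .config enables Deflate,
# either built in ("=y") or as a module ("=m").
check_deflate() {
    config="${1:-/usr/src/linux/.config}"
    value=$(sed -n 's/^CONFIG_CRYPTO_DEFLATE=//p' "$config")
    case "$value" in
        y) echo "Deflate: built in" ;;
        m) echo "Deflate: module" ;;
        *) echo "Deflate: NOT enabled"; return 1 ;;
    esac
}
```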

Module

The kernel module must be rebuilt whenever the kernel is.

When installing the kernel module, always make sure to choose a stable version. The latest stable version, along with the kernel versions it supports, can be found here; also check whether Portage has that specific version.

Do not blindly install the latest version of the module: it is more likely to break than a stable release. A specific version of a package can be installed with:

root #emerge --ask =sys-fs/zfs-kmod-x.x.x

It is also advisable to add a file to the /etc/portage/package.mask directory that masks other versions of sys-fs/zfs-kmod and sys-fs/zfs, so that they are not pulled in. Furthermore, the version of sys-fs/zfs must match that of sys-fs/zfs-kmod.
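Below is a minimal sketch of such a mask file. It assumes /etc/portage/package.mask is a directory, in which case any file inside it is read. pin_zfs is a hypothetical helper. It masks every version newer than the given one for both packages, so the two stay in lockstep. Note that the ">" operator also masks later revisions (e.g. -r1) of that same version.

```shell
# Hypothetical helper: mask every sys-fs/zfs and sys-fs/zfs-kmod version
# newer than VERSION, writing the atoms to FILE.
pin_zfs() {
    version="$1"
    file="${2:-/etc/portage/package.mask/zfs}"
    [ -n "$version" ] || { echo "usage: pin_zfs VERSION [FILE]" >&2; return 1; }
    printf '>sys-fs/zfs-%s\n>sys-fs/zfs-kmod-%s\n' "$version" "$version" > "$file"
}

# Usage: pin_zfs x.x.x   (the same version as installed above)
```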

If using an initramfs that was generated by either dracut or genkernel, please (re)generate it after (re)compiling the module. If you're using a custom initramfs, follow the next section:

Custom INITRAMFS

This section does not apply to dracut, ugrd or genkernel users.

Whenever upgrading to a new kernel, always create a directory for it in the initramfs source tree:

root #mkdir -vp /usr/src/initramfs/lib/modules/$(uname -r)/extra

Copy over the module files so they can be loaded with modprobe somewhere in the init script:

root #cp -Prv /lib/modules/$(uname -r)/extra/* /usr/src/initramfs/lib/modules/$(uname -r)/extra/

The following libraries are also needed in order to boot properly:

root #lddtree --copy-to-tree /usr/src/initramfs /sbin/zfs

root #lddtree --copy-to-tree /usr/src/initramfs /sbin/zpool

root #lddtree --copy-to-tree /usr/src/initramfs /sbin/zed

root #lddtree --copy-to-tree /usr/src/initramfs /sbin/zgenhostid

root #lddtree --copy-to-tree /usr/src/initramfs /sbin/zvol_wait

If there are multiple kernel directories under lib/modules, use -not -path with the find command to exclude specific directories from the archive.

The following example creates an initramfs for kernel 6.6.2 and excludes 6.1.118 from it:

root #cd /usr/src/initramfs

root #find . -not -path "./lib/modules/6.1.118/*" -not -path "./lib/modules/6.1.118" -print0 | cpio --null --create --verbose --format=newc | gzip --best > /boot/initramfs-custom.img

Now that the initramfs has been created, update the bootloader configuration.
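The find expression can also be built to keep a single kernel's module directory and prune all the others, instead of listing old versions by hand. initramfs_files is a hypothetical helper sketched for the layout used above (/usr/src/initramfs/lib/modules/<version>). It prints NUL-separated paths relative to the tree, ready for cpio --null.

```shell
# Hypothetical helper: print the paths of an initramfs source tree,
# NUL-separated, keeping only lib/modules/<KEEP> among the kernel module
# directories. Paths are relative to ROOT (./...), as cpio expects.
initramfs_files() {
    root="$1" keep="$2"
    [ -n "$keep" ] || { echo "usage: initramfs_files ROOT KERNEL_VERSION" >&2; return 1; }
    (
        cd "$root" || exit 1
        # Prune any lib/modules/* entry that is neither the kept version
        # nor inside it; everything else is printed.
        find . -path './lib/modules/*' \
             ! -path "./lib/modules/$keep" \
             ! -path "./lib/modules/$keep/*" \
             -prune -o -print0
    )
}
```

For example, run from /usr/src/initramfs: initramfs_files . 6.6.2 | cpio --null --create --verbose --format=newc | gzip --best > /boot/initramfs-custom.img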

Advanced

For a collection of tips on advanced ways to handle edge-case needs with ZFS, see the following sub-article:

Usage

ZFS already includes all the programs needed to manage the hardware and the filesystems; no additional tools are required.

Preparation

ZFS supports the use of either block devices or files. Administration is the same in both cases, but for production use, the ZFS developers recommend the use of block devices (preferably whole disks). Users can use whichever way is most convenient while experimenting with the various commands detailed here.

To take full advantage of block devices on Advanced Format disks (i.e. drives with 4K sectors), users are strongly advised to read the ZFS on Linux FAQ before creating pools.
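ZFS records the sector size of a vdev as its ashift value, the base-2 logarithm of the sector size in bytes (4096-byte sectors give ashift=12). This value is fixed when the vdev is created and can be forced with zpool create -o ashift=12 .... The hypothetical helper below converts a sector size into the matching ashift. On Linux, the physical sector size a drive reports can be read from /sys/block/<device>/queue/physical_block_size, though some drives misreport it.

```shell
# Hypothetical helper: print the ashift (log2 of the sector size) for a
# sector size given in bytes; rejects values that are not powers of two.
ashift_for() {
    n="$1" ashift=0
    case "$n" in
        ''|*[!0-9]*) echo "not a number: $n" >&2; return 1 ;;
    esac
    [ "$n" -gt 0 ] || { echo "sector size must be positive" >&2; return 1; }
    while [ "$n" -gt 1 ]; do
        [ $((n % 2)) -eq 0 ] || { echo "not a power of two: $1" >&2; return 1; }
        n=$((n / 2))
        ashift=$((ashift + 1))
    done
    echo "$ashift"
}

# Usage: ashift_for "$(cat /sys/block/sda/queue/physical_block_size)"
```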

A ZFS storage pool (or zpool) is an aggregate of one or several virtual devices (or vdevs), each composed of one or several underlying physical devices grouped together in a certain geometry. Three very important rules:

- As a zpool is striped across its vdevs, if one of them becomes unavailable, the whole zpool also becomes unavailable.

- Once a vdev has been created, its geometry is set in stone and cannot be modified. The only way to reconfigure a vdev is to destroy and recreate it.

- To be able to repair a damaged zpool, some level of redundancy is required in each of the underlying vdevs.

Hardware considerations

- ZFS needs to directly see and steer the physical devices supporting a zpool (it has its own I/O scheduler). Using hardware RAID is strongly discouraged, even as multiple single-disk RAID-0 virtual disks, because that approach defeats the benefits brought by ZFS. Always use JBODs. Some controllers offer a JBOD option; others, like older LSI HBAs, have to be re-flashed with a specific firmware ("IT mode" firmware in the case of LSI) to be able to do JBOD. Unfortunately, some RAID controllers only offer RAID levels without any alternative and are unsuitable for ZFS.

- ZFS can be extremely I/O intensive:

- A very popular option in NAS boxes is to use second-hand SAS HBAs attached to several SATA "rusty" platter drives (on a bigger scale, using near-line SATA drives is recommended)

- If a zpool is created with NVMe modules, ensure they are not DRAM-less (check the datasheet), for two reasons: to avoid a significant bottleneck, and to avoid hardware crashes, as some DRAM-less modules tend to freeze when overwhelmed by I/O requests[1].

- Do not use SMR (Shingled Magnetic Recording) drives in zpools: they are very slow, especially at writing, and can time out during a zpool resilver, thus preventing a recovery. Always use CMR drives with ZFS.

- Consider using power-loss-protected NVMe/SSDs (i.e. with on-board capacitors) unless the storage is protected by an uninterruptible power supply (UPS) or has a very reliable power source.

- SATA port multipliers should be avoided unless there is no other option: multiplexing traffic between the connected drives creates major bottlenecks, effectively dividing the available bandwidth, and also increases I/O latency.

- ZFS is able to use the vectorized SSE/AVX instructions found on x86-64 processors to speed up checksum calculations, and automatically selects the fastest implementation. Under Linux, the microbenchmark results can be seen in /proc/spl/kstat/zfs/fletcher_4_bench and /proc/spl/kstat/zfs/vdev_raidz_bench. Example:

root #cat /proc/spl/kstat/zfs/fletcher_4_bench

0 0 0x01 -1 0 2959173570 60832614852064
implementation   native        byteswap
scalar           11346741272   8813266429
superscalar      13447646071   11813021922
superscalar4     15452304506   13531748022
sse2             24205728004   11368002233
ssse3            24301258139   22109806540
avx2             43631210878   41199229117
avx512f          47804283343   19883061560
avx512bw         47806962319   42246291561
fastest          avx512bw      avx512bw
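When only the selected implementation matters, the "fastest" row can be extracted from the benchmark file. zfs_fastest_impl is a hypothetical helper. It assumes the layout shown above, where the fastest row lists the native and byteswap choices in its second and third columns.

```shell
# Hypothetical helper: print the implementations ZFS selected, taken from
# the "fastest" row of fletcher_4_bench; fails if no such row exists.
zfs_fastest_impl() {
    awk '$1 == "fastest" { printf "native=%s byteswap=%s\n", $2, $3; found = 1; exit }
         END { if (!found) exit 1 }' "${1:-/proc/spl/kstat/zfs/fletcher_4_bench}"
}
```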

Redundancy considerations

Although a zpool can have several vdevs, each of different geometry and capacity, all the drives within a given vdev must be of the same type and characteristics, most notably the same capacity and the same sector size. Mixing SSDs and "rusty" platter drives within the same vdev is not recommended because of performance discrepancies.

Just like RAID, ZFS offers several options to create a redundant (or non-redundant) vdev:

- striped ("RAID-0"): The fastest option, but offers no protection against a drive loss. If a drive dies, not only does the vdev die but the whole zpool dies as well (remember: a zpool is always striped across its vdevs).

- mirror ("RAID-1"): each drive is a mirror of the other(s). Usually two drives are used, but it is possible to have a 3-way (or wider) mirror at the cost of space efficiency. A two-way mirror tolerates the loss of one drive only, a 3-way mirror the loss of two.

- raidz1 (or simply raidz, "RAID-5"): a single parity is distributed across the vdev, so the vdev only tolerates the loss of a single drive. The total vdev capacity is the sum of the individual drive capacities minus the capacity of one drive.

- raidz2 ("RAID-6"): same concept as raidz1 but with two parities. The total vdev capacity is the sum of the individual drive capacities minus the capacity of two drives. Tolerates the loss of two drives.

- raidz3: same concept as raidz1 but with three parities. The total vdev capacity is the sum of the individual drive capacities minus the capacity of three drives. Tolerates the loss of three drives. Use for extreme data security.
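These capacity rules boil down to simple arithmetic. For striped and RAID-Z vdevs, usable space is (number of disks - number of parity disks) × disk size. For a mirror, it is the size of a single disk. The hypothetical helper below applies these rules to one vdev of identical, integer-sized disks. Real pools report somewhat less because of metadata, padding and reserved space.

```shell
# Hypothetical helper: approximate usable capacity and fault tolerance of
# one vdev. Usage: vdev_usable LAYOUT DISKS SIZE (SIZE in any integer unit).
vdev_usable() {
    layout="$1" disks="$2" size="$3"
    case "$layout" in
        stripe)       parity=0 ;;
        raidz|raidz1) parity=1 ;;
        raidz2)       parity=2 ;;
        raidz3)       parity=3 ;;
        mirror)
            # Every disk holds a full copy: one disk of space,
            # and all disks but one may be lost.
            [ "$disks" -ge 2 ] || { echo "a mirror needs at least 2 disks" >&2; return 1; }
            echo "usable=$size tolerates=$((disks - 1))"
            return 0 ;;
        *) echo "unknown layout: $layout" >&2; return 1 ;;
    esac
    [ "$disks" -gt "$parity" ] || { echo "need more than $parity disks" >&2; return 1; }
    echo "usable=$(( (disks - parity) * size )) tolerates=$parity"
}

# Usage: vdev_usable raidz2 6 4   (six 4TB disks)
```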

Preparing the storage pool

The recommended way to use ZFS is with physical devices. It is not necessary to partition them beforehand, as ZFS automatically handles "blank" devices.

As an alternative for the rest of these explanations, files lying on top of an existing filesystem can be used if no physical devices are available. This approach, suitable for experimentation, must however never be used on production systems.

The following commands create four 2GB sparse image files in /var/lib/zfs_img to be used as hard drives. This uses at most 8GB of disk space, but in practice very little, because only written areas are allocated:

root #mkdir /var/lib/zfs_img

root #truncate -s 2G /var/lib/zfs_img/zfs0.img

root #truncate -s 2G /var/lib/zfs_img/zfs1.img

root #truncate -s 2G /var/lib/zfs_img/zfs2.img

root #truncate -s 2G /var/lib/zfs_img/zfs3.img

On pool export, all of the files will be released and the directory /var/lib/zfs_img can be deleted.
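The four truncate commands can also be written as a loop. make_sparse_images is a hypothetical helper that takes the directory, the number of images and their size (any size accepted by truncate -s). It follows the zfs0.img, zfs1.img, ... naming used above.

```shell
# Hypothetical helper: create COUNT sparse files of SIZE in DIR,
# named zfs0.img, zfs1.img, ...
make_sparse_images() {
    dir="$1" count="$2" size="$3"
    mkdir -p "$dir" || return 1
    i=0
    while [ "$i" -lt "$count" ]; do
        truncate -s "$size" "$dir/zfs$i.img" || return 1
        i=$((i + 1))
    done
}

# Usage: make_sparse_images /var/lib/zfs_img 4 2G
```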

If a ramdisk is more convenient, the equivalent of the above is:

root #modprobe brd rd_nr=4 rd_size=1953125

root #ls -l /dev/ram*

brw-rw---- 1 root disk 1, 0 Apr 29 12:32 /dev/ram0
brw-rw---- 1 root disk 1, 1 Apr 29 12:32 /dev/ram1
brw-rw---- 1 root disk 1, 2 Apr 29 12:32 /dev/ram2
brw-rw---- 1 root disk 1, 3 Apr 29 12:32 /dev/ram3

This solution requires CONFIG_BLK_DEV_RAM=m in the Linux kernel configuration. As a last resort, loopback devices combined with files stored in a tmpfs filesystem can be used. The first step is to create the tmpfs where the raw image files will be put:

root #mkdir /var/lib/zfs_img

root #mount -t tmpfs tmpfs /var/lib/zfs_img

Then create the raw image files:

root #dd if=/dev/null of=/var/lib/zfs_img/zfs0.img bs=1024 count=2097153 seek=2097152

root #dd if=/dev/null of=/var/lib/zfs_img/zfs1.img bs=1024 count=2097153 seek=2097152

root #dd if=/dev/null of=/var/lib/zfs_img/zfs2.img bs=1024 count=2097153 seek=2097152

root #dd if=/dev/null of=/var/lib/zfs_img/zfs3.img bs=1024 count=2097153 seek=2097152

Then assign a loopback device to each file created above:

root #losetup /dev/loop0 /var/lib/zfs_img/zfs0.img

root #losetup /dev/loop1 /var/lib/zfs_img/zfs1.img

root #losetup /dev/loop2 /var/lib/zfs_img/zfs2.img

root #losetup /dev/loop3 /var/lib/zfs_img/zfs3.img

root #losetup -a

/dev/loop1: [0067]:3 (/var/lib/zfs_img/zfs1.img)
/dev/loop2: [0067]:4 (/var/lib/zfs_img/zfs2.img)
/dev/loop0: [0067]:2 (/var/lib/zfs_img/zfs0.img)
/dev/loop3: [0067]:5 (/var/lib/zfs_img/zfs3.img)

Zpools

Every ZFS story starts with the program /usr/sbin/zpool, which is used to create and tweak ZFS storage pools (zpools). A zpool is a kind of logical storage space grouping one or several vdevs together. That logical storage can contain both:

- datasets: a set of files and directories mounted in the VFS, just like any traditional filesystem such as xfs or ext4

- zvolumes (or zvols): virtual volumes which can be accessed as virtual block devices under /dev and used just like any other physical hard drive on the machine.

Once a zpool has been created, it is possible to take point-in-time pictures (snapshots) of its different zvolumes and datasets. Those snapshots can be browsed or even rolled back to restore the content as it was at that point in time. Another ZFS paradigm is that zpools, datasets and zvolumes are governed by attributes: encryption, data compression, usage quotas and many other aspects are just examples of what can be tweaked through attributes.

Remember: a zpool is striped across its vdevs, so losing a single vdev means losing the whole zpool. Redundancy happens at the vdev level, not at the zpool level. When a zpool is lost, the only option is to rebuild it from scratch and restore its contents from backups. Taking snapshots is not sufficient for crash recovery: they have to be sent elsewhere. More on this in the following sections.

ZFS is more than a filesystem in the commonly accepted sense, as it is a hybrid between a filesystem and a volume manager. Discussing that aspect is out of scope; just keep it in mind, as this article uses the common term "filesystem" throughout.

Creating a zpool

The general syntax is:

root #zpool create <poolname> [mirror|raidz|raidz2|raidz3] /dev/blockdevice1 /dev/blockdevice2 ... [mirror|raidz|raidz2|raidz3] /dev/blockdeviceA /dev/blockdeviceB ... [mirror|raidz|raidz2|raidz3] /dev/blockdeviceX /dev/blockdeviceY ...

If none of mirror, raidz, raidz2 or raidz3 is specified, the devices are striped (there is no keyword for a striped layout). Some examples:

- zpool create zpool_test /dev/sda: creates a zpool composed of a single vdev containing only one physical device (no redundancy at all!)

- zpool create zpool_test /dev/sda /dev/sdb: creates a zpool composed of two single-device vdevs striped together (no redundancy at all!)

- zpool create zpool_test mirror /dev/sda /dev/sdb: creates a zpool composed of a single vdev containing two physical devices in mirror

- zpool create zpool_test mirror /dev/sda /dev/sdb mirror /dev/sdc /dev/sdd: creates a striped mirror zpool composed of two vdevs, each containing two physical devices in mirror (one device in each vdev can die without losing the zpool)

- zpool create zpool_test raidz /dev/sda /dev/sdb /dev/sdc: creates a zpool composed of a single vdev containing three physical devices in RAID-Z1 (one loss can be tolerated)

There are some other keywords for vdev types, like spare, cache, log (SLOG), special (metadata) or draid (distributed RAID).

If files rather than block devices are used, just substitute the block device paths with the full paths of the files. So:

- zpool create zpool_test /dev/sda becomes zpool create zpool_test /var/lib/zfs_img/zfs0.img

- zpool create zpool_test /dev/sda /dev/sdb becomes zpool create zpool_test /var/lib/zfs_img/zfs0.img /var/lib/zfs_img/zfs1.img

and so on.
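For pools made of many two-way mirrors, typing the mirror keyword before each pair is error-prone. mirror_pairs is a hypothetical helper that turns an even list of devices into the vdev part of the command line. Its output is meant to be word-split by the shell, so it assumes device paths contain no spaces. The result can be reviewed first with zpool create -n, which only displays the configuration that would be used.

```shell
# Hypothetical helper: turn "A B C D ..." into "mirror A B mirror C D ..."
# for use after "zpool create <poolname>".
mirror_pairs() {
    if [ $# -eq 0 ] || [ $(( $# % 2 )) -ne 0 ]; then
        echo "need an even, non-zero number of devices" >&2
        return 1
    fi
    out=""
    while [ $# -gt 0 ]; do
        out="$out mirror $1 $2"
        shift 2
    done
    echo "${out# }"   # drop the leading space
}

# Usage: zpool create -n zpool_test $(mirror_pairs /dev/sda /dev/sdb /dev/sdc /dev/sdd)
```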

Once a zpool has been created, whatever its architecture is, a dataset having the same name is also automatically created and mounted directly under the VFS root:

root #mount | grep zpool_test

zpool_test on /zpool_test type zfs (rw,xattr,noacl)

For the moment, that dataset is an empty eggshell containing no data.

Displaying zpools statistics and status

The list of the currently known zpools and some usage statistics can be checked by:

root #zpool list

NAME         SIZE   ALLOC  FREE   CKPOINT  EXPANDSZ  FRAG  CAP  DEDUP  HEALTH  ALTROOT
rpool        5.45T  991G   4.49T  -        -         1%    17%  1.00x  ONLINE  -
zpool_test   99.5G  456K   99.5G  -        -         0%    0%   1.00x  ONLINE  -

Some brief explanations on what is displayed:

- NAME: The name of the pool

- SIZE: The total size of the pool. For RAID-Z vdevs, this size includes the space consumed by parity. As zpool_test is a mirrored vdev, the reported size here is the size of a single disk.

- ALLOC: The current size of stored data (same remark as above for RAID-Z vdevs)

- FREE: The available free space (FREE = SIZE - ALLOC)

- CKPOINT: The size taken by a checkpoint, if any (i.e. a global zpool snapshot)

- EXPANDSZ: The space available to expand the zpool. Usually nothing is listed here, except in particular scenarios

- FRAG: Free space fragmentation, expressed as a percentage. Fragmentation is not an issue with ZFS, as it has been designed to deal with it, and there is currently no way to defragment a zpool other than recreating it from scratch and restoring its data from backups.

- CAP: Used space, expressed as a percentage

- DEDUP: Data deduplication factor. Always 1.00x unless data deduplication was active on the zpool.

- HEALTH: Current pool state, "ONLINE" meaning the pool is in an optimal state (i.e. neither degraded nor, worse, unavailable)

- ALTROOT: Alternate root path (not persistent across reboots). This is handy when a zpool has to be handled from a live media environment: all datasets are mounted relative to this path, which can be set via the -R option of the zpool import command.
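For scripts, the -H option of zpool list (no headers, tab-separated fields) combined with -o to select columns gives output that is easy to parse. The hypothetical helper below reads such output and prints the pools whose CAP is at or above a threshold, which could serve as a simple cron check. Values that are not plain numbers (such as a dash) are skipped.

```shell
# Hypothetical helper: read "name<TAB>capacity" lines, as printed by
# "zpool list -H -o name,capacity", and print pools at or above LIMIT %.
pools_over() {
    limit="$1"
    tab=$(printf '\t')
    while IFS="$tab" read -r name cap; do
        cap="${cap%\%}"                                  # "17%" -> "17"
        case "$cap" in ''|*[!0-9]*) continue ;; esac     # skip "-" and the like
        if [ "$cap" -ge "$limit" ]; then
            echo "$name"
        fi
    done
}

# Usage: zpool list -H -o name,capacity | pools_over 80
```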

It is also possible to get a bit more detail using the -v option:

root #zpool list -v

NAME                SIZE   ALLOC  FREE   CKPOINT  EXPANDSZ  FRAG  CAP    DEDUP  HEALTH  ALTROOT
rpool               5.45T  991G   4.49T  -        -         1%    17%    1.00x  ONLINE  -
  raidz1-0          5.45T  991G   4.49T  -        -         1%    17.8%  -      ONLINE
    /dev/nvme1n1p1  1.82T  -      -      -        -         -     -      -      ONLINE
    /dev/nvme2n1p1  1.82T  -      -      -        -         -     -      -      ONLINE
    /dev/nvme0n1p1  1.82T  -      -      -        -         -     -      -      ONLINE
zpool_test          99.5G  540K   99.5G  -        -         0%    0%     1.00x  ONLINE  -
  mirror-0          99.5G  540K   99.5G  -        -         0%    0.00%  -      ONLINE
    /dev/sdd1       99.9G  -      -      -        -         -     -      -      ONLINE
    /dev/sdg1       99.9G  -      -      -        -         -     -      -      ONLINE

Another very useful command is zpool status, which provides a detailed report on what is happening on the zpools and whether something has to be fixed:

root #zpool status

pool: rpool

state: ONLINE

scan: scrub repaired 0B in 00:03:18 with 0 errors on Sat Apr 29 15:11:19 2023

config:

NAME STATE READ WRITE CKSUM

rpool ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

/dev/nvme1n1p1 ONLINE 0 0 0

/dev/nvme2n1p1 ONLINE 0 0 0

/dev/nvme0n1p1 ONLINE 0 0 0

errors: No known data errors

pool: zpool_test

state: ONLINE

status: One or more devices are configured to use a non-native block size.

Expect reduced performance.

action: Replace affected devices with devices that support the

configured block size, or migrate data to a properly configured

pool.

config:

NAME STATE READ WRITE CKSUM

zpool_test ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

/dev/sdd1 ONLINE 0 0 0 block size: 4096B configured, 65536B native

/dev/sdg1 ONLINE 0 0 0 block size: 4096B configured, 65536B native

errors: No known data errors
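zpool status -x already limits its report to pools with problems, or prints "all pools are healthy". A hypothetical alternative for scripts is to parse the tab-separated HEALTH column from zpool list -H -o name,health and exit non-zero when any pool is not ONLINE, as sketched below.

```shell
# Hypothetical helper: read "name<TAB>health" lines, as printed by
# "zpool list -H -o name,health", report every pool that is not ONLINE
# and return non-zero if at least one was found.
unhealthy_pools() {
    bad=0
    tab=$(printf '\t')
    while IFS="$tab" read -r name health; do
        if [ "$health" != "ONLINE" ]; then
            echo "$name: $health"
            bad=1
        fi
    done
    return "$bad"
}

# Usage: zpool list -H -o name,health | unhealthy_pools || echo "attention needed"
```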

Import/Export zpool

Refer to the zpool-import(8) manpage for detailed descriptions of all available options.

A zpool does not magically appear: it has to be imported to be known and usable by the system. Once a zpool is imported, the following happens:

- All datasets, including the root dataset, are mounted in the VFS unless the mountpoint attribute tells otherwise (i.e. mountpoint=none). If the datasets are nested and have to be mounted in a hierarchical manner, ZFS handles this case automatically.

- Block device entries are created for zvolumes under /dev/zvol/<poolname>

To search for and list all available (and not already imported) zpools in the system, issue the command:

root #zpool import

pool: zpool_test

id: 5283656651466890926

state: ONLINE

status: One or more devices are configured to use a non-native block size.

Expect reduced performance.

action: The pool can be imported using its name or numeric identifier.

config:

zpool_test ONLINE

mirror-0 ONLINE

/dev/sdd1 ONLINE

/dev/sdg1 ONLINE

To actually import a zpool, just name it and ZFS will automatically probe all attached drives for it:

root #zpool import zpool_test

or use the -a option to import all available zpools:

root #zpool import -a

If all the vdevs composing a zpool are available, either in a clean or in a degraded (but acceptable) state, the import should complete successfully. If even a single vdev is missing, the zpool cannot be imported and has to be restored from a backup.

Time to demonstrate the famous ALTROOT mentioned earlier: suppose zpool_test is to be imported with all of its mountable datasets relative to /mnt/gentoo rather than the VFS root. This is achieved like this:

root #zpool import -R /mnt/gentoo zpool_test

root #zpool list

NAME         SIZE   ALLOC  FREE   CKPOINT  EXPANDSZ  FRAG  CAP  DEDUP  HEALTH  ALTROOT
rpool        5.45T  991G   4.49T  -        -         1%    17%  1.00x  ONLINE  -
zpool_test   99.5G  948K   99.5G  -        -         0%    0%   1.00x  ONLINE  /mnt/gentoo

Confirmed by:

root #mount | grep zpool_test

zpool_test on /mnt/gentoo/zpool_test type zfs (rw,xattr,noacl)

The reverse operation of importing a zpool is called exporting the pool. If nothing uses the pool (i.e. no open files on datasets and no zvolume in use), the zpool can be exported simply with:

root #zpool export zpool_test

At this time, all mounted datasets are unmounted from the VFS and all block device entries corresponding to zvolumes are cleared from /dev.

Editing Zpools properties

A zpool has several attributes, also called properties. While some can be changed and act as "knobs" governing aspects like data compression or encryption, others are intrinsic and can only be read. Two subcommands of zpool can be used to interact with properties:

- zpool get propertyname zpoolname to view propertyname (the special property name all displays everything)

- zpool set propertyname=propertyvalue zpoolname to set propertyname to propertyvalue

root #zpool get all zpool_test

NAME PROPERTY VALUE SOURCE
zpool_test size 99.5G -
zpool_test capacity 0% -
zpool_test altroot - default
zpool_test health ONLINE -
zpool_test guid 14297858677399026207 -
zpool_test version - default
zpool_test bootfs - default
zpool_test delegation on default
zpool_test autoreplace off default
zpool_test cachefile - default
zpool_test failmode wait default
zpool_test listsnapshots off default
zpool_test autoexpand off default
zpool_test dedupratio 1.00x -
zpool_test free 99.5G -
zpool_test allocated 6.56M -
zpool_test readonly off -
zpool_test ashift 16 local
zpool_test comment - default
zpool_test expandsize - -
zpool_test freeing 0 -
zpool_test fragmentation 0% -
zpool_test leaked 0 -
zpool_test multihost off default
zpool_test checkpoint - -
zpool_test load_guid 6528179821128937184 -
zpool_test autotrim off default
zpool_test compatibility off default
zpool_test feature@async_destroy enabled local
zpool_test feature@empty_bpobj enabled local
zpool_test feature@lz4_compress active local
zpool_test feature@multi_vdev_crash_dump enabled local
zpool_test feature@spacemap_histogram active local
zpool_test feature@enabled_txg active local
zpool_test feature@hole_birth active local
zpool_test feature@extensible_dataset active local
zpool_test feature@embedded_data active local
zpool_test feature@bookmarks enabled local
zpool_test feature@filesystem_limits enabled local
zpool_test feature@large_blocks enabled local
zpool_test feature@large_dnode enabled local
zpool_test feature@sha512 enabled local
zpool_test feature@skein enabled local
zpool_test feature@edonr enabled local
zpool_test feature@userobj_accounting active local
zpool_test feature@encryption enabled local
zpool_test feature@project_quota active local
zpool_test feature@device_removal enabled local
zpool_test feature@obsolete_counts enabled local
zpool_test feature@zpool_checkpoint enabled local
zpool_test feature@spacemap_v2 active local
zpool_test feature@allocation_classes enabled local
zpool_test feature@resilver_defer enabled local
zpool_test feature@bookmark_v2 enabled local
zpool_test feature@redaction_bookmarks enabled local
zpool_test feature@redacted_datasets enabled local
zpool_test feature@bookmark_written enabled local
zpool_test feature@log_spacemap active local
zpool_test feature@livelist enabled local
zpool_test feature@device_rebuild enabled local
zpool_test feature@zstd_compress enabled local
zpool_test feature@draid enabled local

The output follows a tabular format where the last two columns are:

- VALUE: The current value of the property. A boolean property can be on/off; a zpool feature can be active (enabled and currently in use), enabled (enabled but currently not in use) or disabled (disabled, not in use). Depending on the OpenZFS version on the system and the features enabled on a zpool, that zpool may or may not be importable. More explanation on this in a moment.

- SOURCE: Indicates whether the property value has been overridden (local) or the default value is used (default). If a property cannot be changed and can only be read, a dash is displayed.
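For a quick overview of feature states, the scripted output of zpool get can be summarized instead of scrolling through the full list. Here -H gives tab-separated fields without headers, and -o selects the property and value columns. feature_summary is a hypothetical helper that counts feature@ properties per state.

```shell
# Hypothetical helper: count feature flags per state from
# "zpool get -H -o property,value all <pool>" output on stdin.
feature_summary() {
    awk -F '\t' '$1 ~ /^feature@/ { n[$2]++ }
        END { printf "active=%d enabled=%d disabled=%d\n", n["active"], n["enabled"], n["disabled"] }'
}

# Usage: zpool get -H -o property,value all zpool_test | feature_summary
```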

While not all will be described, something immediately catches the eye: a number of properties have names starting with feature@. What is that? History[2].

The legacy zpool version numbering scheme was abandoned in 2012 (shortly after Oracle discontinued OpenSolaris and continued the development of ZFS behind closed doors) and replaced by feature flags (the zpool version number was also set to 5000 and has never changed since), hence the various feature@ properties in the command output above.

The latest legacy version number supported by a ZFS implementation can be checked with zpool upgrade -v. With OpenZFS/ZFS, the latest supported legacy version is 28.

At the time the version numbering scheme was in use, when a zpool was created, the operating system tagged it with the highest supported zpool version number[3]. Those were numbered from 1 (ZFS initial release) to 49, which is supported by Solaris 11.4 SRU 51. As the zpool version number always increased between Solaris releases, a newer Solaris version can always import a zpool coming from an older one. There is however a catch: the zpool must have its version number upgraded (a manual operation) to get the benefits of the new ZFS features and enhancements brought by a newer Solaris version. Once the zpool version number has been upgraded, older Solaris versions can no longer import the zpool. E.g. a zpool created under Solaris 10 9/10 (supporting zpool versions up to 22) can be imported under Solaris 10 1/13 (supporting zpool versions up to 32), and even be imported again on Solaris 10 9/10 after that, at least as long as the zpool version number has not been upgraded under Solaris 10 1/13 (which would tag the zpool at version 32).

The same remains true with feature flags: if the zpool has a feature flag active that is not supported by the ZFS version in use on the system, the zpool cannot be imported. As with the legacy version numbers, to get the benefits of the new feature flags provided by later OpenZFS/ZFS releases, the zpool must be upgraded (a manual operation). Once this has been done, older OpenZFS/ZFS releases can no longer import the zpool.

For zpool import purposes only, disabled or enabled feature flag settings do not matter. When a feature is active, the read-only/not at all rules from the Feature Flags chart apply.

Feature flags can be tricky: some immediately become active once enabled, some never go back to disabled once enabled and some never go back to enabled once active. See zpool-features(7) for more details. For backwards compatibility, consider enabling features selectively, only as needed.

If the zpool lies on SSD or NVMe drives, it might be convenient to enable the autotrim property to let ZFS handle the "housekeeping" on its own:

root #zpool get autotrim zpool_test

NAME        PROPERTY  VALUE  SOURCE
zpool_test  autotrim  off    default

root #zpool set autotrim=on zpool_test

root #zpool get autotrim zpool_test

NAME        PROPERTY  VALUE  SOURCE
zpool_test  autotrim  on     local
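The check-then-set sequence above can be scripted, for example in a provisioning script. This is a minimal sketch assuming a POSIX shell; the function name ensure_autotrim is hypothetical, and the code relies on zpool get -H -o value printing only the bare property value (scripted mode, no header).

```shell
# ensure_autotrim POOL: turn the autotrim property on for POOL if it is off.
# Hypothetical helper; `zpool get -H -o value` prints the value only.
ensure_autotrim() {
    pool="$1"
    current=$(zpool get -H -o value autotrim "$pool") || return 1
    if [ "$current" = "on" ]; then
        echo "autotrim already on for $pool"
        return 0
    fi
    # Only reached when the current value is not "on".
    zpool set autotrim=on "$pool" && echo "autotrim enabled for $pool"
}
```

For example, ensure_autotrim zpool_test prints "autotrim enabled for zpool_test" the first time and "autotrim already on for zpool_test" on later runs.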

Another useful feature is data compression. When compression is turned on, ZFS uses LZ4 by default; if switching to ZSTD is desired, a naïve approach would be:

root #zpool set feature@zstd_compress=active zpool_test

cannot set property for 'zpool_test': property 'feature@zstd_compress' can only be set to 'enabled' or 'disabled'

Indeed, even though support for that feature is enabled on the zpool, it cannot be activated by setting the pool property that way. It becomes active once the compression property of a dataset or a zvolume is set to zstd (for example with zfs set compression=zstd zpool_test). From then on, the ZSTD compression algorithm is effectively in use (active):

root #zpool get feature@zstd_compress zpool_test

NAME        PROPERTY               VALUE   SOURCE
zpool_test  feature@zstd_compress  active  local

The change applies only to newly written data; any existing data on the zpool is left untouched.
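To see at a glance which feature flags are in a given state (for instance to confirm that zstd_compress went from enabled to active), the scripted output of zpool get can be filtered. This is a sketch: features_in_state is a hypothetical helper name, and it assumes zpool get -H -o property,value prints tab-separated lines without a header.

```shell
# features_in_state POOL STATE: print the feature flags of POOL whose state
# is STATE (disabled, enabled or active), without the "feature@" prefix.
# Hypothetical helper; -H output is tab-separated with no header line.
features_in_state() {
    zpool get -H -o property,value all "$1" |
        awk -F '\t' -v want="$2" '
            index($1, "feature@") == 1 && $2 == want {
                sub(/^feature@/, "", $1)
                print $1
            }'
}
```

For example, features_in_state zpool_test active lists the features currently in use on zpool_test.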

As mentioned before, a zpool has to be upgraded to use the new features provided by newer OpenZFS/ZFS versions (or by whatever ZFS implementation the operating system uses). When an upgrade is due, zpool status reports it and suggests running zpool upgrade (the full command in this case being zpool upgrade zpool_test):

root #zpool status zpool_test

pool: zpool_test

state: ONLINE

status: Some supported and requested features are not enabled on the pool.

The pool can still be used, but some features are unavailable.

action: Enable all features using 'zpool upgrade'. Once this is done,

the pool may no longer be accessible by software that does not support

the features. See zpool-features(7) for details.

config:

(...)

Once the zpool upgrade has been done:

root #zpool status zpool_test

pool: zpool_test

state: ONLINE

config:

(...)
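In a script, the upgrade hint shown by zpool status can be detected before deciding whether to run zpool upgrade. This is a rough sketch only: pool_needs_upgrade is a hypothetical helper that merely searches the status text for the 'zpool upgrade' hint, whose exact wording may differ between ZFS versions.

```shell
# pool_needs_upgrade POOL: exit 0 when `zpool status` for POOL mentions
# 'zpool upgrade' (both the feature-flag and the legacy-version hints do).
# Hypothetical helper; it depends on the wording of the status message.
pool_needs_upgrade() {
    zpool status "$1" | grep -q "'zpool upgrade'"
}
```

For example: pool_needs_upgrade zpool_test && echo "zpool_test can be upgraded".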

To display the list of feature flags supported by the ZFS version in use on the operating system:

root #zpool upgrade -v

This system supports ZFS pool feature flags.

The following features are supported:

FEAT DESCRIPTION

-------------------------------------------------------------

async_destroy (read-only compatible)

Destroy filesystems asynchronously.

empty_bpobj (read-only compatible)

Snapshots use less space.

lz4_compress

LZ4 compression algorithm support.

multi_vdev_crash_dump

Crash dumps to multiple vdev pools.

spacemap_histogram (read-only compatible)

Spacemaps maintain space histograms.

enabled_txg (read-only compatible)

Record txg at which a feature is enabled

hole_birth

Retain hole birth txg for more precise zfs send

extensible_dataset

Enhanced dataset functionality, used by other features.

embedded_data

Blocks which compress very well use even less space.

bookmarks (read-only compatible)

"zfs bookmark" command

filesystem_limits (read-only compatible)

Filesystem and snapshot limits.

large_blocks

Support for blocks larger than 128KB.

large_dnode

Variable on-disk size of dnodes.

sha512

SHA-512/256 hash algorithm.

skein

Skein hash algorithm.

edonr

Edon-R hash algorithm.

userobj_accounting (read-only compatible)

User/Group object accounting.

encryption

Support for dataset level encryption

project_quota (read-only compatible)

space/object accounting based on project ID.

device_removal

Top-level vdevs can be removed, reducing logical pool size.

obsolete_counts (read-only compatible)

Reduce memory used by removed devices when the blocks are freed or remapped.

zpool_checkpoint (read-only compatible)

Pool state can be checkpointed, allowing rewind later.

spacemap_v2 (read-only compatible)

Space maps representing large segments are more efficient.

allocation_classes (read-only compatible)

Support for separate allocation classes.

resilver_defer (read-only compatible)

Support for deferring new resilvers when one is already running.

bookmark_v2

Support for larger bookmarks

redaction_bookmarks

Support for bookmarks which store redaction lists for zfs redacted send/recv.

redacted_datasets

Support for redacted datasets, produced by receiving a redacted zfs send stream.

bookmark_written

Additional accounting, enabling the written#<bookmark> property (space written since a bookmark), and estimates of send stream sizes for incrementals from bookmarks.

log_spacemap (read-only compatible)

Log metaslab changes on a single spacemap and flush them periodically.

livelist (read-only compatible)

Improved clone deletion performance.

device_rebuild (read-only compatible)

Support for sequential device rebuilds

zstd_compress

zstd compression algorithm support.

draid

Support for distributed spare RAID

The following legacy versions are also supported:

VER DESCRIPTION

--- --------------------------------------------------------

1 Initial ZFS version

2 Ditto blocks (replicated metadata)

3 Hot spares and double parity RAID-Z

4 zpool history

5 Compression using the gzip algorithm

6 bootfs pool property

7 Separate intent log devices

8 Delegated administration

9 refquota and refreservation properties

10 Cache devices

11 Improved scrub performance

12 Snapshot properties

13 snapused property

14 passthrough-x aclinherit

15 user/group space accounting

16 stmf property support

17 Triple-parity RAID-Z

18 Snapshot user holds

19 Log device removal

20 Compression using zle (zero-length encoding)

21 Deduplication

22 Received properties

23 Slim ZIL

24 System attributes

25 Improved scrub stats

26 Improved snapshot deletion performance

27 Improved snapshot creation performance

28 Multiple vdev replacements

Zpool failures and spare vdevs

Hardware is not perfect: sometimes a drive fails, or the data on it becomes corrupt. ZFS has countermeasures to detect corruption, even double bit-flip errors. If the underlying vdevs are redundant, damaged data can be repaired as if nothing had ever happened.

Remember that zpools are striped across all of their vdevs, so two scenarios can happen when a device fails:

- Enough redundancy exists: the zpool is still available, but in a "DEGRADED" state

- Not enough redundancy exists: the zpool becomes "SUSPENDED" (at this point data must be restored from a backup)

zpool status reports the current situation:

root #zpool status zpool_test

pool: zpool_test

state: DEGRADED

status: One or more devices could not be used because the label is missing or

invalid. Sufficient replicas exist for the pool to continue

functioning in a degraded state.

action: Replace the device using 'zpool replace'.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-4J

config:

NAME STATE READ WRITE CKSUM

zpool_test DEGRADED 0 0 0

mirror-0 DEGRADED 0 0 0

/dev/sde1 UNAVAIL 3 80 0

/dev/sdh1 ONLINE 0 0 0
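For unattended monitoring, the pool health can also be read in scripted form. This is a minimal sketch assuming a POSIX shell; check_pool_health is a hypothetical helper built on zpool list -H -o health, which prints only the health value (for example ONLINE or DEGRADED).

```shell
# check_pool_health POOL: print "POOL: HEALTH" and return 0 only if POOL is
# ONLINE (1 otherwise, 2 if zpool itself failed). Hypothetical helper.
check_pool_health() {
    health=$(zpool list -H -o health "$1") || return 2
    echo "$1: $health"
    [ "$health" = "ONLINE" ]
}
```

For example, check_pool_health zpool_test || echo "zpool_test needs attention" could run from cron. For a quick interactive check, zpool status -x only reports zpools that have problems.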

To simulate a drive failure caused by a block device issue, run echo offline > /sys/block/<block device>/device/state (echo running > /sys/block/<block device>/device/state brings the drive back to the online state).

The defective drive (/dev/sde) has to be physically replaced by another one (here /dev/sdg). This is accomplished by first taking the failed drive offline in the zpool:

root #zpool offline zpool_test /dev/sde

root #zpool status zpool_test

pool: zpool_test

state: DEGRADED

status: One or more devices has experienced an unrecoverable error. An

attempt was made to correct the error. Applications are unaffected.

action: Determine if the device needs to be replaced, and clear the errors

using 'zpool clear' or replace the device with 'zpool replace'.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P

config:

NAME STATE READ WRITE CKSUM

zpool_test DEGRADED 0 0 0

mirror-0 DEGRADED 0 0 0

/dev/sde1 OFFLINE 3 80 0

/dev/sdh1 ONLINE 0 0 0

errors: No known data errors

Then proceed with the replacement (ZFS partitions the new drive automatically):

root #zpool replace zpool_test /dev/sde /dev/sdg

root #zpool status zpool_test

pool: zpool_test

state: ONLINE

scan: resilvered 28.9M in 00:00:01 with 0 errors on Sat May 6 11:12:55 2023

config:

NAME STATE READ WRITE CKSUM

zpool_test ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

/dev/sdg1 ONLINE 0 0 0

/dev/sdh1 ONLINE 0 0 0

Once the drive has been replaced, ZFS starts a rebuilding process in the background, known as resilvering, which can take several hours or even days depending on the amount of data stored on the zpool. Here the amount is very small, so resilvering takes just a couple of seconds. On large zpools the resilvering duration can become prohibitive, and this is where draid (a totally different beast than raidz) kicks in; more on this later.
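When a script has to wait for the end of a resilver (for instance before replacing another drive), zpool status can be polled. This is a sketch: resilver_in_progress and wait_for_resilver are hypothetical helpers that match the "resilver in progress" wording of the scan: line, and the default 60-second interval is an arbitrary choice. Recent OpenZFS releases also provide zpool wait -t resilver, which blocks until the resilver is done.

```shell
# resilver_in_progress POOL: exit 0 while `zpool status` reports a resilver
# in progress for POOL. Hypothetical helper relying on the scan: wording.
resilver_in_progress() {
    zpool status "$1" | grep -q 'resilver in progress'
}

# wait_for_resilver POOL [SECONDS]: poll every SECONDS (default 60) until no
# resilver is reported anymore, then print a short message.
wait_for_resilver() {
    while resilver_in_progress "$1"; do
        sleep "${2:-60}"
    done
    echo "$1: no resilver in progress"
}
```

For example: wait_for_resilver zpool_test 30 && zpool status zpool_test.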

Rather than relying on a manual operation, a zpool can have hot-spare drives that automatically replace failed ones. This is exactly the purpose of a special vdev known as... spare. Spare vdevs can be defined when the zpool is created, or added on the fly to an existing zpool. Hot-spare drives should be identical to the drives they are meant to replace.

To add a spare vdev to an existing zpool, issue a zpool add command (here again, ZFS partitions the hot-spare drive on its own). Once added to a zpool, the hot-spare remains on stand-by until a drive failure happens:

root #zpool add zpool_test spare /dev/sdd

root #zpool status zpool_test

pool: zpool_test

state: ONLINE

scan: resilvered 28.9M in 00:00:01 with 0 errors on Sat May 6 11:12:55 2023

config:

NAME STATE READ WRITE CKSUM

zpool_test ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

/dev/sdg1 ONLINE 0 0 0

/dev/sdh1 ONLINE 0 0 0

spares

/dev/sdd1 AVAIL

errors: No known data errors

At this point the hot-spare is available (AVAIL). If /dev/sdh (or /dev/sdg) fails, /dev/sdd immediately takes over the failed drive (so it becomes INUSE), triggering a resilver in the background with no downtime for the zpool:

root #zpool status

pool: zpool_test

state: DEGRADED

status: One or more devices could not be used because the label is missing or

invalid. Sufficient replicas exist for the pool to continue

functioning in a degraded state.

action: Replace the device using 'zpool replace'.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-4J

scan: resilvered 40.7M in 00:00:01 with 0 errors on Sat May 6 11:39:28 2023

config:

NAME STATE READ WRITE CKSUM

zpool_test DEGRADED 0 0 0

mirror-0 DEGRADED 0 0 0

spare-0 DEGRADED 0 0 0

/dev/sdg1 UNAVAIL 3 83 0

/dev/sdd1 ONLINE 0 0 0

/dev/sdh1 ONLINE 0 0 0

spares

/dev/sdd1 INUSE currently in use

The original drive should get removed automatically and asynchronously. If this is not the case, it may need to be detached manually with the zpool detach command:

root #zpool detach zpool_test /dev/sdg

root #zpool status

pool: zpool_test

state: ONLINE

scan: resilvered 40.7M in 00:00:01 with 0 errors on Sat May 6 11:39:28 2023

config:

NAME STATE READ WRITE CKSUM

zpool_test ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

/dev/sdd1 ONLINE 0 0 0

/dev/sdh1 ONLINE 0 0 0

errors: No known data errors

Once the failed drive has been physically replaced, it can be re-added to the zpool as a new hot-spare. It is also possible to share the same hot-spare between more than one zpool.
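On zpools with several hot-spares, their states can be extracted from zpool status. This is a sketch assuming the output keeps the layout shown above (a spares heading followed by one device per line, up to a blank line or the errors: line); list_spares is a hypothetical helper that reads the status text on standard input.

```shell
# list_spares: read `zpool status` output on stdin and print each hot spare
# with its state (AVAIL, INUSE, ...). Hypothetical helper; "spare-0" vdev
# lines in the config tree are ignored because only the "spares" section
# is scanned.
list_spares() {
    awk '
        $1 == "spares" && NF == 1 { inspares = 1; next }
        inspares && (NF == 0 || $1 == "errors:") { inspares = 0 }
        inspares { print $1, $2 }
    '
}
```

For example, zpool status zpool_test | list_spares prints "/dev/sdd1 AVAIL" while the spare is on stand-by, and "/dev/sdd1 INUSE" once it has taken over a failed drive.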

As the present example uses a mirrored vdev, it is also possible to add a third, actively used drive to it rather than using a hot-spare drive. This is accomplished by attaching the new drive (zpool attach) to one of the existing drives of the mirrored vdev:

root #zpool attach zpool_test /dev/sdh /dev/sdg

root #zpool status zpool_test

pool: zpool_test

state: ONLINE

scan: resilvered 54.4M in 00:00:00 with 0 errors on Sat May 6 11:55:42 2023

config:

NAME STATE READ WRITE CKSUM

zpool_test ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

/dev/sdd1 ONLINE 0 0 0

/dev/sdh1 ONLINE 0 0 0

/dev/sdg1 ONLINE 0 0 0

errors: No known data errors

Running zpool attach zpool_test /dev/sdd /dev/sdg would have given the same result.

It is good practice to scrub a zpool periodically to ensure that its contents are "healthy" (if something is corrupt and the zpool has enough redundancy, the damage is repaired). To start a manual scrub:

root #zpool scrub zpool_test

root #zpool status zpool_test

pool: zpool_test

state: ONLINE

scan: scrub repaired 0B in 00:00:01 with 0 errors on Sat May 6 12:06:18 2023

config:

NAME STATE READ WRITE CKSUM

zpool_test ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

/dev/sdd1 ONLINE 0 0 0

/dev/sdh1 ONLINE 0 0 0

/dev/sdg1 ONLINE 0 0 0

errors: No known data errors

If something corrupt is detected, the output of the above commands looks like this instead:

root #zpool scrub zpool_test

root #zpool status zpool_test

pool: zpool_test

state: ONLINE

status: One or more devices has experienced an unrecoverable error. An

attempt was made to correct the error. Applications are unaffected.

action: Determine if the device needs to be replaced, and clear the errors

using 'zpool clear' or replace the device with 'zpool replace'.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P

scan: scrub repaired 256K in 00:00:00 with 0 errors on Sat May 6 12:09:22 2023

config:

NAME STATE READ WRITE CKSUM

zpool_test ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

/dev/sdd1 ONLINE 0 0 4

/dev/sdh1 ONLINE 0 0 0

/dev/sdg1 ONLINE 0 0 0

errors: No known data errors

To corrupt a drive's contents for testing purposes, a command like dd if=/dev/urandom of=/dev/sdd1 can be issued (give it a few seconds to corrupt enough data).

Once the damage has been repaired, issue a zpool clear command as suggested:

root #zpool clear zpool_test

root #zpool status zpool_test

pool: zpool_test

state: ONLINE

scan: scrub repaired 256K in 00:00:00 with 0 errors on Sat May 6 12:09:22 2023

config:

NAME STATE READ WRITE CKSUM

zpool_test ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

/dev/sdd1 ONLINE 0 0 0

/dev/sdh1 ONLINE 0 0 0

/dev/sdg1 ONLINE 0 0 0

errors: No known data errors
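On larger zpools, spotting non-zero error counters by eye gets tedious. The sketch below, a hypothetical devices_with_errors helper reading zpool status output on standard input, prints every entry of the config section whose READ, WRITE or CKSUM counter is not zero. It assumes the column layout shown above; counters can carry a suffix (e.g. 68.9K), so they are compared as strings rather than numbers.

```shell
# devices_with_errors: read `zpool status` output on stdin and print each
# config entry whose READ, WRITE or CKSUM counter is not "0".
# Hypothetical helper; lines whose 3rd-5th fields do not start with a digit
# (e.g. "/dev/sdd1 INUSE currently in use" under spares) are skipped.
devices_with_errors() {
    awk '
        $1 == "NAME" && $2 == "STATE" { inconfig = 1; next }
        inconfig && (NF == 0 || $1 == "errors:") { inconfig = 0 }
        inconfig && NF >= 5 && $3 ~ /^[0-9]/ && $4 ~ /^[0-9]/ && $5 ~ /^[0-9]/ &&
            ($3 != "0" || $4 != "0" || $5 != "0") {
            print $1, "read=" $3, "write=" $4, "cksum=" $5
        }
    '
}
```

Running zpool status zpool_test | devices_with_errors before zpool clear shows which drives were affected.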

To detach a drive from a three-way mirror vdev, just use zpool detach as seen before. It is even possible to detach a drive from a two-way mirror, at the cost of losing all redundancy.

Speaking of three-way mirrors, a zpool remains functional even with only one of the three drives healthy:

root #zpool scrub zpool_test

root #zpool status zpool_test

pool: zpool_test

state: ONLINE

status: One or more devices has experienced an unrecoverable error. An

attempt was made to correct the error. Applications are unaffected.

action: Determine if the device needs to be replaced, and clear the errors

using 'zpool clear' or replace the device with 'zpool replace'.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P

scan: scrub in progress since Sat May 6 13:43:09 2023

5.47G scanned at 510M/s, 466M issued at 42.3M/s, 5.47G total

0B repaired, 8.31% done, 00:02:01 to go

config:

NAME STATE READ WRITE CKSUM

zpool_test ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

/dev/sdd1 ONLINE 0 0 0

/dev/sdh1 ONLINE 0 0 68.9K

/dev/sdg1 ONLINE 0 0 68.9K

errors: No known data errors
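Since scrubs should be run periodically, they are often started from a scheduler such as cron. This is a minimal sketch; scrub_all_pools is a hypothetical helper that starts a scrub on every imported zpool listed by zpool list -H -o name (one name per line, no header). A scrub cannot be started on a zpool that is already being scrubbed; in that case the helper reports the failure on standard error and moves on to the next zpool.

```shell
# scrub_all_pools: start a scrub on every imported zpool.
# Hypothetical helper, e.g. called from a monthly cron job.
scrub_all_pools() {
    zpool list -H -o name | while read -r pool; do
        echo "starting scrub on $pool"
        zpool scrub "$pool" || echo "scrub of $pool could not be started" >&2
    done
}
```

The scrubs run in the background; their progress can then be followed with zpool status as shown above.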

Destroying and restoring a zpool

Once a zpool is no longer needed, it can be destroyed with zpool destroy, which also destroys all the datasets and zvolumes it contains (the -f option forces active datasets to be unmounted). There is no need to export the zpool first, as ZFS handles this automatically.

If the zpool has just been destroyed and all of its vdevs are still intact, it can be re-imported by issuing zpool import, this time with the -D option:

root #zpool destroy zpool_test

root #zpool import -D

pool: zpool_test

id: 628141733996329543

state: ONLINE (DESTROYED)

action: The pool can be imported using its name or numeric identifier.

config:

zpool_test ONLINE

mirror-0 ONLINE

/dev/sdd1 ONLINE

/dev/sdh1 ONLINE

/dev/sdg1 ONLINE

Importing the destroyed zpool that way also "undestroys" it:

root #zpool list zpool_test

cannot open 'zpool_test': no such pool

root #zpool import -D zpool_test

root #zpool list zpool_test

NAME        SIZE   ALLOC  FREE   CKPOINT  EXPANDSZ  FRAG  CAP  DEDUP  HEALTH  ALTROOT
zpool_test  99.5G  5.48G  94.0G  -        -         0%    5%   1.00x  ONLINE  -

Technically speaking, destroying a zpool simply consists of tagging it as being in a "destroyed" state; no data is wiped from the underlying vdevs. Therefore all unencrypted data can still be read.
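When several destroyed zpools show up in zpool import -D, possibly with the same name, importing by numeric identifier avoids ambiguity. This is only a sketch: list_destroyed_pools is a hypothetical helper that reads zpool import -D output on standard input and prints the name and id of each zpool, assuming the pool: and id: lines shown above.

```shell
# list_destroyed_pools: read `zpool import -D` output on stdin and print
# "NAME ID" for each zpool listed. Hypothetical helper relying on the
# "pool:" line always preceding its "id:" line.
list_destroyed_pools() {
    awk '$1 == "pool:" { name = $2 } $1 == "id:" { print name, $2 }'
}
```

For example, zpool import -D | list_destroyed_pools would print "zpool_test 628141733996329543" here, after which zpool import -D 628141733996329543 imports that specific zpool.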

Browsing a zpool History

Sometimes it is extremely useful to get the list of what happened during a zpool's life, and this is where zpool history comes to the rescue. As zpool_test is brand new and has nothing really interesting to show, here is an example from a real zpool used in production on a workstation recently restored from remote TrueNAS backups:

root #zpool history rpool

History for 'rpool':
2023-05-02.19:24:36 zpool create rpool raidz /dev/nvme0n1 /dev/nvme1n1 /dev/nvme2n1
2023-05-02.19:27:32 zfs create -o mountpoint=none rpool/ROOT
2023-05-02.19:27:36 zfs create -o mountpoint=none rpool/HOME
2023-05-02.19:33:59 zfs receive -vu rpool/ROOT/gentoo-gcc13-avx512
2023-05-02.19:39:57 zfs receive -vu rpool/HOME/user111
2023-05-02.20:05:52 zpool set autotrim=on rpool
2023-05-02.20:08:12 zfs destroy -r rpool/HOME/user111
2023-05-02.20:33:27 zfs receive -vu rpool/HOME/user1
2023-05-02.20:35:12 zpool import -a
2023-05-02.20:58:19 zpool scrub rpool
2023-05-02.21:22:25 zpool import -N rpool
2023-05-02.21:23:18 zpool set cachefile=/etc/zfs/zpool.cache rpool
2023-05-03.07:02:25 zfs snap rpool/ROOT/gentoo-gcc13-avx512@20230503
2023-05-03.07:02:36 zfs snap rpool/HOME/user1@20230503
2023-05-03.07:04:16 zfs send -I rpool/HOME/user1@20230430 rpool/HOME/user1@20230502
2023-05-07.16:10:15 zpool import -N -c /etc/zfs/zpool.cache rpool
(...)

The same information is available in long format (who/what/where/when) with the -l option:

root #zpool history -l rpool

History for 'rpool':
2023-05-02.19:24:36 zpool create rpool raidz /dev/nvme0n1 /dev/nvme1n1 /dev/nvme2n1 [user 0 (root) on livecd:linux]
2023-05-02.19:27:32 zfs create -o mountpoint=none rpool/ROOT [user 0 (root) on livecd:linux]
2023-05-02.19:27:36 zfs create -o mountpoint=none rpool/HOME [user 0 (root) on livecd:linux]
2023-05-02.19:33:59 zfs receive -vu rpool/ROOT/gentoo-gcc13-avx512 [user 0 (root) on livecd:linux]
2023-05-02.19:39:57 zfs receive -vu rpool/HOME/user111 [user 0 (root) on livecd:linux]
(...)
2023-05-02.20:35:12 zpool import -a [user 0 (root) on wks.myhomenet.lan:linux]

Note that:

- The zpool has been created using a Linux live media environment (in that case the Gentoo LiveCD), and that environment had set livecd as the hostname at that time

- Two placeholder (non-mountable) datasets named rpool/ROOT and rpool/HOME have been created by the user root under that live media environment

- The dataset rpool/ROOT/gentoo-gcc13-avx512 (obviously the Gentoo system) has been restored from a remote snapshot by the user root under that live media environment

- The dataset rpool/HOME/user111 (obviously a user home directory) has been restored from a remote snapshot by the user root under that live media environment

- At some point, the workstation rebooted into the restored Gentoo environment (notice the hostname change to wks.myhomenet.lan), which imported the zpool

- and so on.
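On long-lived zpools the history grows quickly. Because each entry starts with a timestamp in the fixed-width YYYY-MM-DD.HH:MM:SS format, a plain string comparison orders entries chronologically, which makes filtering easy. A sketch, with history_since as a hypothetical helper name:

```shell
# history_since STAMP: read `zpool history` output on stdin and keep only
# the entries logged at or after STAMP (e.g. 2023-05-03 or
# 2023-05-03.07:00:00). Hypothetical helper; the "History for" header and
# other non-timestamped lines are dropped. Appending "" forces awk to
# compare the values as strings.
history_since() {
    awk -v since="$1" '$1 ~ /^[0-9][0-9][0-9][0-9]-/ && ($1 "") >= (since "")'
}
```

For example, zpool history rpool | history_since 2023-05-03 keeps only the entries from May 3, 2023 onwards; adding -l to zpool history keeps the who/where details on each line.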

Zpool tips/tricks

- It is sometimes not possible to shrink a zpool after initial creation - if the pool has no raidz vdevs and the pool has all vdevs of the same ashift, the "device removal" feature in 0.8 and above can be used. There are performance implications to doing this, however, so always be careful when creating pools or adding vdevs/disks!

- It is possible to add more disks to a MIRROR after its initial creation. Use the following command (/dev/loop0 is the first drive in the MIRROR):

root #zpool attach zfs_test /dev/loop0 /dev/loop2

- Sometimes, a wider RAIDZ vdev can be less suitable than two (or more) smaller RAIDZ vdevs. Test the intended use before settling on a layout and moving all data onto it.

- With OpenZFS releases before 2.3, RAIDZ vdevs cannot be resized after initial creation; only additional hot spares may be added. However, the hard drives can be replaced with bigger ones (one at a time), e.g. replacing 1T drives with 2T drives doubles the available space in the zpool.

- It is possible to mix MIRROR and RAIDZ vdevs in a zpool. For example, to add two more disks as a MIRROR vdev to a zpool named zfs_test that contains a RAIDZ1 vdev, use:

root #zpool add -f zfs_test mirror /dev/loop4 /dev/loop5

Warning

There is probably no good reason to do this. A special vdev or log vdev might be a reasonable exception, but mixing vdev types generally ends up with the worst performance characteristics of both.

Note

Adding a vdev that does not match the existing ones requires the -f option.

Zpools coming from other architectures

It is possible to import zpools coming from other platforms, even ones with a different byte ordering (endianness), as long as:

- the zpool is at version 28 (legacy) or earlier, or

- in the case of a zpool at version 5000 (feature flags), the ZFS implementation in use supports at least the features used by the zpool.

In the following example, a zpool named oldpool had been created in a FreeBSD 10/SPARC64 environment emulated with app-emulation/qemu. The zpool had been created with legacy version 28 (zpool create -o version=28), which is basically what a SPARC64 machine running Solaris 10 would also have done:

root #zpool import

pool: oldpool

id: 11727962949360948576

state: ONLINE

status: The pool is formatted using a legacy on-disk version.

action: The pool can be imported using its name or numeric identifier, though

some features will not be available without an explicit 'zpool upgrade'.

config:

oldpool ONLINE

raidz1-0 ONLINE

/dev/loop0 ONLINE

/dev/loop1 ONLINE

/dev/loop2 ONLINE

root #zpool import -f oldpool

root #zpool status oldpool

pool: oldpool

state: ONLINE

status: The pool is formatted using a legacy on-disk format. The pool can

still be used, but some features are unavailable.

action: Upgrade the pool using 'zpool upgrade'. Once this is done, the

pool will no longer be accessible on software that does not support

feature flags.

config:

NAME STATE READ WRITE CKSUM

oldpool ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

/dev/loop0 ONLINE 0 0 0

/dev/loop1 ONLINE 0 0 0

/dev/loop2 ONLINE 0 0 0

errors: No known data errors

Notice the values of all feature@ (all are disabled) and version (28) properties:

root #zpool get all oldpool

NAME     PROPERTY                       VALUE                 SOURCE
oldpool  size                           11.9G                 -
oldpool  capacity                       0%                    -
oldpool  altroot                        -                     default
oldpool  health                         ONLINE                -
oldpool  guid                           11727962949360948576  -
oldpool  version                        28                    local
oldpool  bootfs                         -                     default
oldpool  delegation                     on                    default
oldpool  autoreplace                    off                   default
oldpool  cachefile                      -                     default
oldpool  failmode                       wait                  default
oldpool  listsnapshots                  off                   default
oldpool  autoexpand                     off                   default
oldpool  dedupratio                     1.00x                 -
oldpool  free                           11.9G                 -
oldpool  allocated                      88.8M                 -
oldpool  readonly                       off                   -
oldpool  ashift                         0                     default
oldpool  comment                        -                     default
oldpool  expandsize                     -                     -
oldpool  freeing                        0                     -
oldpool  fragmentation                  -                     -
oldpool  leaked                         0                     -
oldpool  multihost                      off                   default
oldpool  checkpoint                     -                     -
oldpool  load_guid                      11399183722859374527  -
oldpool  autotrim                       off                   default
oldpool  compatibility                  off                   default
oldpool  feature@async_destroy          disabled              local
oldpool  feature@empty_bpobj            disabled              local
oldpool  feature@lz4_compress           disabled              local
oldpool  feature@multi_vdev_crash_dump  disabled              local
oldpool  feature@spacemap_histogram     disabled              local
oldpool  feature@enabled_txg            disabled              local
oldpool  feature@hole_birth             disabled              local
oldpool  feature@extensible_dataset     disabled              local
oldpool  feature@embedded_data          disabled              local
oldpool  feature@bookmarks              disabled              local
oldpool  feature@filesystem_limits      disabled              local
oldpool  feature@large_blocks           disabled              local
oldpool  feature@large_dnode            disabled              local
oldpool  feature@sha512                 disabled              local
oldpool  feature@skein                  disabled              local
oldpool  feature@edonr                  disabled              local
oldpool  feature@userobj_accounting     disabled              local
oldpool  feature@encryption             disabled              local
oldpool  feature@project_quota          disabled              local
oldpool  feature@device_removal         disabled              local
oldpool  feature@obsolete_counts        disabled              local
oldpool  feature@zpool_checkpoint       disabled              local
oldpool  feature@spacemap_v2            disabled              local
oldpool  feature@allocation_classes     disabled              local
oldpool  feature@resilver_defer         disabled              local
oldpool  feature@bookmark_v2            disabled              local
oldpool  feature@redaction_bookmarks    disabled              local
oldpool  feature@redacted_datasets      disabled              local
oldpool  feature@bookmark_written       disabled              local
oldpool  feature@log_spacemap           disabled              local
oldpool  feature@livelist               disabled              local
oldpool  feature@device_rebuild         disabled              local
oldpool  feature@zstd_compress          disabled              local
oldpool  feature@draid                  disabled              local

FreeBSD versions 9, 10, 11 and 12 use the ZFS implementation coming from Illumos/OpenSolaris. FreeBSD 13 uses OpenZFS 2.x.

To let this zpool use the very latest features of OpenZFS/ZFS (or of the ZFS implementation used on the system), upgrade it as explained in the sections above:

root #zpool upgrade oldpool

This system supports ZFS pool feature flags.

Successfully upgraded 'oldpool' from version 28 to feature flags.
Enabled the following features on 'oldpool':
  async_destroy
  empty_bpobj
  lz4_compress
  multi_vdev_crash_dump
  spacemap_histogram
  enabled_txg
  hole_birth
  extensible_dataset
  embedded_data
  bookmarks
  filesystem_limits
  large_blocks
  large_dnode
  sha512
  skein
  edonr
  userobj_accounting
  encryption
  project_quota
  device_removal
  obsolete_counts
  zpool_checkpoint
  spacemap_v2
  allocation_classes
  resilver_defer
  bookmark_v2
  redaction_bookmarks
  redacted_datasets
  bookmark_written
  log_spacemap
  livelist
  device_rebuild
  zstd_compress
  draid

As a test, scrub the pool:

root #zpool scrub oldpool

root #zpool status oldpool

pool: oldpool

state: ONLINE

scan: scrub repaired 0B in 00:00:00 with 0 errors on Sun May 7 16:35:57 2023

config:

NAME STATE READ WRITE CKSUM

oldpool ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

/dev/loop0 ONLINE 0 0 0

/dev/loop1 ONLINE 0 0 0

/dev/loop2 ONLINE 0 0 0

errors: No known data errors

What about the contained data?

root #tar -tvf /oldpool/boot-fbsd10-sparc64.tar

drwxr-xr-x root/wheel      0 2017-09-29 04:09 boot/
-r--r--r-- root/wheel   3557 2017-09-29 04:09 boot/beastie.4th
-r--r--r-- root/wheel   8192 2017-09-29 04:09 boot/boot1
-r--r--r-- root/wheel   2809 2017-09-29 04:09 boot/brand.4th
-r--r--r-- root/wheel   2129 2017-09-29 04:09 boot/brand-fbsd.4th
-r--r--r-- root/wheel   6208 2017-09-29 04:09 boot/check-password.4th
-r--r--r-- root/wheel   1870 2017-09-29 04:09 boot/color.4th
drwxr-xr-x root/wheel      0 2017-09-29 04:09 boot/defaults/
-r--r--r-- root/wheel   4056 2017-09-29 04:09 boot/delay.4th
-r--r--r-- root/wheel     89 2017-09-29 04:09 boot/device.hints
drwxr-xr-x root/wheel      0 2017-09-29 04:09 boot/dtb/
drwxr-xr-x root/wheel      0 2017-09-29 04:09 boot/firmware/
-r--r--r-- root/wheel   4179 2017-09-29 04:09 boot/frames.4th
drwxr-xr-x root/wheel      0 2017-09-29 04:09 boot/kernel/
-r--r--r-- root/wheel   6821 2017-09-29 04:09 boot/loader.4th
-r-xr-xr-x root/wheel 211768 2017-09-29 04:09 boot/loader
(...)

A zpool is perfectly importable even when it comes from a totally different world (SPARC64 is a big-endian, MSB-first architecture, whereas ARM/x86_64 are little-endian, LSB-first architectures). To clean everything up (the image files and loop devices are created in the second part of this section, below):

root #zpool export oldpool

root #losetup -D

root #rm /tmp/fbsd-sparc64-ada{0,1,2}.raw

Now that the zpool has been upgraded, what happens if an import is attempted in the older FreeBSD 10/SPARC64 environment?

root #zpool import -o altroot=/tmp -f oldpool

This pool uses the following feature(s) not supported by this system:

com.delphix:spacemap_v2 (Space maps representing large segments are more efficient.)

com.delphix:log_spacemap (Log metaslab changes on a single spacemap and flush them periodically.)

All unsupported features are only required for writing to the pool.

The pool can be imported using '-o readonly=on'.

root #zpool import -o readonly=on -o altroot=/tmp -f oldpool

NAME     SIZE   ALLOC  FREE   EXPANDSZ  FRAG  CAP  DEDUP  HEALTH  ALTROOT
oldpool  11.9G  88.7M  11.9G  -         0%    0%   1.00x  ONLINE  /tmp

The system sees the zpool but refuses to import it in a read/write state. The zpool status and zpool list commands do not show that the zpool has been imported read-only, but zpool get all gives a (not so obvious) hint:

root #zpool get all

NAME     PROPERTY                                  VALUE                 SOURCE
oldpool  size                                      11.9G                 -
oldpool  capacity                                  0%                    -
oldpool  altroot                                   /tmp                  local
oldpool  health                                    ONLINE                -
oldpool  guid                                      11727962949360948576  default
oldpool  version                                   -                     default
oldpool  bootfs                                    -                     default
oldpool  delegation                                on                    default
oldpool  autoreplace                               off                   default
oldpool  cachefile                                 none                  local
oldpool  failmode                                  wait                  default
oldpool  listsnapshots                             off                   default
oldpool  autoexpand                                off                   default
oldpool  dedupditto                                0                     default
oldpool  dedupratio                                1.00x                 -
oldpool  free                                      11.9G                 -
oldpool  allocated                                 88.7M                 -
oldpool  readonly                                  on                    -
oldpool  comment                                   -                     default
oldpool  expandsize                                -                     -
oldpool  freeing                                   0                     default
oldpool  fragmentation                             0%                    -
oldpool  leaked                                    0                     default
oldpool  feature@async_destroy                     enabled               local
oldpool  feature@empty_bpobj                       enabled               local
oldpool  feature@lz4_compress                      active                local
oldpool  feature@multi_vdev_crash_dump             enabled               local
oldpool  feature@spacemap_histogram                active                local
oldpool  feature@enabled_txg                       active                local
(...)
oldpool  unsupported@com.delphix:zpool_checkpoint  inactive              local
oldpool  unsupported@com.delphix:spacemap_v2       readonly              local
oldpool  unsupported@com.delphix:log_spacemap      readonly              local
(...)

Notice the values of the properties unsupported@com.delphix:spacemap_v2 and unsupported@com.delphix:log_spacemap. Also notice that all unsupported features have names starting with unsupported@.
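On a system running an older ZFS implementation, the unsupported@ properties can be extracted to see which features prevent a read/write import. This is a sketch only: unsupported_features is a hypothetical helper that parses the default (column) output of zpool get all, used here instead of scripted mode in case the older zpool command does not support it. A readonly value means the zpool can only be imported with -o readonly=on.

```shell
# unsupported_features POOL: print "FEATURE STATE" for every unsupported@
# property of POOL, without the "unsupported@" prefix.
# Hypothetical helper; expects the NAME PROPERTY VALUE SOURCE columns.
unsupported_features() {
    zpool get all "$1" |
        awk 'index($2, "unsupported@") == 1 {
                 sub(/^unsupported@/, "", $2)
                 print $2, $3
             }'
}
```

For example, unsupported_features oldpool | awk '$2 == "readonly"' lists only the features that restrict the import to read-only mode.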

For completeness, here is what happens when attempting to import a totally incompatible zpool. The following example shows a Linux system (OpenZFS 2.1.x) attempting to import a zpool created under Solaris 11.4 (Solaris still uses the legacy version scheme and tagged the zpool at version 42):

root #zpool import -f rpool

cannot import 'rpool': pool is formatted using an unsupported ZFS version

In that case there is a life jacket: although the zpool version is unknown to OpenZFS, the ZFS version reported by both Solaris 11.4 and OpenZFS 2.1.x is 5 (not to be confused with the zpool version), so the datasets on the zpool can be "zfs sent" somewhere from the Solaris environment, then "zfs received" in the Linux environment.

For the sake of reproducibility, the rest of this section details the steps followed to create the zpool oldpool in a FreeBSD/SPARC64 environment. The rest of this section can be skipped if that process is of no interest.

To create the zpool oldpool used above:

- Install app-emulation/qemu (QEMU 8.0 was used), ensuring the variable QEMU_SOFTMMU_TARGETS contains at least sparc64 to build the "full" SPARC64 system emulator:

root #emerge --ask app-emulation/qemu

- Download a FreeBSD 10/SPARC64 DVD ISO image from one of the mirrors (FreeBSD 9 crashes at boot under QEMU, so FreeBSD 10 is the minimum, while FreeBSD 12 has some issues loading the required modules)

- Create some images (here three of 4G each) for the future vdevs: for i in {0..2}; do qemu-img create -f raw /tmp/fbsd-sparc64-ada${i}.raw 4G; done

- Fire QEMU up (using -nographic to keep FreeBSD from hanging; Sun4u machines have 2 IDE channels, hence 3 hard drives + 1 ATAPI CD/DVD-ROM drive):

root #qemu-system-sparc64 -nographic -m 2G -drive format=raw,file=/tmp/fbsd-sparc64-ada0.raw,media=disk,index=0 -drive format=raw,file=/tmp/fbsd-sparc64-ada1.raw,media=disk,index=1 -drive format=raw,file=/tmp/fbsd-sparc64-ada2.raw,media=disk,index=2 -drive format=raw,file=/tmp/FreeBSD-10.4-RELEASE-sparc64-dvd1.iso,media=cdrom,readonly=on

OpenBIOS for Sparc64
Configuration device id QEMU version 1 machine id 0
kernel cmdline
CPUs: 1 x SUNW,UltraSPARC-IIi
UUID: 00000000-0000-0000-0000-000000000000
Welcome to OpenBIOS v1.1 built on Mar 7 2023 22:22
  Type 'help' for detailed information
Trying disk:a...
No valid state has been set by load or init-program

0 >

- At the OpenBIOS 0 > prompt, enter: boot cdrom:f

- FreeBSD should now boot. Emulation is slow, so this can take a while. Let the boot process complete and check that all the virtual disks (adaX) are mentioned:

Not a bootable ELF image

Loading a.out image...

Loaded 7680 bytes

entry point is 0x4000

>> FreeBSD/sparc64 boot block

Boot path: /pci@1fe,0/pci@1,1/ide@3/ide@1/cdrom@1:f

Boot loader: /boot/loader

Consoles: Open Firmware console

FreeBSD/sparc64 bootstrap loader, Revision 1.0

(root@releng1.nyi.freebsd.org, Fri Sep 29 07:57:55 UTC 2017)

bootpath="/pci@1fe,0/pci@1,1/ide@3/ide@1/cdrom@1:a"

Loading /boot/defaults/loader.conf

/boot/kernel/kernel data=0xc25140+0xe62a0 syms=[0x8+0xcd2c0+0x8+0xbf738]

Hit [Enter] to boot immediately, or any other key for command prompt.

Booting [/boot/kernel/kernel]...

jumping to kernel entry at 0xc00a8000.

Copyright (c) 1992-2017 The FreeBSD Project.

Copyright (c) 1979, 1980, 1983, 1986, 1988, 1989, 1991, 1992, 1993, 1994

The Regents of the University of California. All rights reserved.

FreeBSD is a registered trademark of The FreeBSD Foundation.

FreeBSD 10.4-RELEASE #0 r324094: Fri Sep 29 08:00:33 UTC 2017

root@releng1.nyi.freebsd.org:/usr/obj/sparc64.sparc64/usr/src/sys/GENERIC sparc64

(...)

ada0 at ata2 bus 0 scbus0 target 0 lun 0

ada0: <QEMU HARDDISK 2.5+> ATA-7 device

ada0: Serial Number QM00001

ada0: 33.300MB/s transfers (UDMA2, PIO 8192bytes)

ada0: 4096MB (8388608 512 byte sectors)

ada0: Previously was known as ad0

cd0 at ata3 bus 0 scbus1 target 1 lun 0

cd0: <QEMU QEMU DVD-ROM 2.5+> Removable CD-ROM SCSI device

cd0: Serial Number QM00004

cd0: 33.300MB/s transfers (UDMA2, ATAPI 12bytes, PIO 65534bytes)

cd0: 2673MB (1368640 2048 byte sectors)

ada1 at ata2 bus 0 scbus0 target 1 lun 0

ada1: <QEMU HARDDISK 2.5+> ATA-7 device

ada1: Serial Number QM00002

ada1: 33.300MB/s transfers (UDMA2, PIO 8192bytes)

ada1: 4096MB (8388608 512 byte sectors)

ada1: Previously was known as ad1

ada2 at ata3 bus 0 scbus1 target 0 lun 0

ada2: <QEMU HARDDISK 2.5+> ATA-7 device

ada2: Serial Number QM00003

ada2: 33.300MB/s transfers (UDMA2, PIO 8192bytes)

ada2: 4096MB (8388608 512 byte sectors)

ada2: Previously was known as ad2

(...)

Console type [vt100]:

- When the kernel has finished booting, FreeBSD asks for the console type. Just press ENTER to accept the default choice (VT100).

- The FreeBSD installer is spawned. Press the TAB key twice to highlight LiveCD, then press ENTER to exit the installer and reach a login prompt.

- At the login prompt, enter root (no password) et voila! A root shell prompt.

- Issue the command kldload opensolaris, then kldload zfs. Some warnings can be ignored; kldstat shows what was actually loaded:

root@:~ # kldload opensolaris

root@:~ # kldload zfs

ZFS NOTICE: Prefetch is disabled by default if less than 4GB of RAM is present;

to enable, add "vfs.zfs.prefetch_disable=0" to /boot/loader.conf.

ZFS filesystem version: 5

ZFS storage pool version: features support (5000)

root@:~ # kldstat

Id Refs Address Size Name

1 10 0xc0000000 e97de8 kernel

2 2 0xc186e000 106000 opensolaris.ko

3 1 0xc1974000 3f4000 zfs.ko

- Create a zpool named oldpool with a legacy version scheme (version 28) by issuing:

root@:~ # zpool create -o altroot=/tmp -o version=28 oldpool raidz /dev/ada0 /dev/ada1 /dev/ada2

- Checking the zpool status should report oldpool as being ONLINE. ZFS should also complain that the legacy format is used; don't upgrade yet!

root@:~ # zpool status

pool: oldpool

state: ONLINE

status: The pool is formatted using a legacy on-disk format. The pool can

still be used, but some features are unavailable.

action: Upgrade the pool using 'zpool upgrade'. Once this is done, the

pool will no longer be accessible on software that does not support feature

flags.

scan: none requested

config:

NAME STATE READ WRITE CKSUM

oldpool ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

ada0 ONLINE 0 0 0

ada1 ONLINE 0 0 0

ada2 ONLINE 0 0 0

errors: No known data errors

- The zpool has been (temporarily) mounted under /tmp/oldpool, so copy some data onto it with a command like tar cvf /tmp/oldpool/boot-fbsd10-sparc64.tar /boot

- Export the zpool: zpool export oldpool

- Press Ctrl+A then X to terminate QEMU and return to the Gentoo host shell. No graceful shutdown is needed, as this is a live environment.

- The last step is to map some loop devices to the raw images:

root #for i in {0..2}; do losetup -f /tmp/fbsd-sparc64-ada${i}.raw; done;

root #losetup -l

NAME       SIZELIMIT OFFSET AUTOCLEAR RO BACK-FILE                  DIO LOG-SEC
/dev/loop1         0      0         0  0 /tmp/fbsd-sparc64-ada1.raw   0     512
/dev/loop2         0      0         0  0 /tmp/fbsd-sparc64-ada2.raw   0     512
/dev/loop0         0      0         0  0 /tmp/fbsd-sparc64-ada0.raw   0     512

ZFS datasets

Concepts

/usr/sbin/zpool is used to manage zpools. The second ZFS tool, /usr/sbin/zfs, is used for any operation regarding datasets. It is extremely important to understand several concepts detailed in zfsconcepts(7) and zfs(8).

- datasets are named logical data containers managed as a single entity by the /usr/sbin/zfs command. A dataset has a unique name (path) within a given zpool and can be either a filesystem, a snapshot, a bookmark or a zvolume.

- filesystems are POSIX filesystems that can be mounted in the system VFS. Just like an ext4 or an XFS filesystem, they appear as a set of files and directories. An extended form of POSIX ACLs (NFSv4 ACLs) and extended attributes are supported.

Moving files between filesystem datasets, even within the same zpool, is a "copy-then-delete" operation rather than an instant move.

- snapshots are frozen, read-only point-in-time states of what a filesystem or a zvolume contains. ZFS is a copy-on-write beast, so a snapshot initially shares all its blocks with its dataset. It only starts consuming space as those blocks are later modified or deleted, which makes snapshots approximately free to take if nothing has changed. Unlike LVM snapshots, ZFS snapshots do not require any space reservation, and their number is virtually unlimited. A snapshot can be browsed via a "magic path" (for filesystems, the hidden .zfs/snapshot directory at the root of the mountpoint). This retrieves the contents of a filesystem or a zvolume as they were when the snapshot was taken. A snapshot can also be used to roll back the whole filesystem or zvolume it relates to. Snapshots are named: zpoolname/datasetname@snapshotname.

Important things to note about snapshots:

- Always back up to an external system! If the zpool becomes corrupt/unavailable or the dataset is destroyed, the data is gone forever.

- Snapshots are not coordinated with applications, so ongoing writes are not guaranteed to be stored in full in the snapshot. For example, if 15GB of a 40GB file have been written when the snapshot is taken, the snapshot will only hold a "photo" of those 15GB.

- The first snapshot of a zvolume may incur unexpected space implications if a reservation is set on the zvolume as it will then reserve enough space to overwrite the entire volume once on top of any space allocated already for the zvolume.

- bookmarks are like snapshots but hold no data: they simply store a point-in-time reference, just like a bookmark left in a page of a book. This is extremely useful for incremental transfers to a backup system, because the previous snapshots of a given dataset no longer need to be kept on the source system. Bookmarks are also used to make redacted datasets. Bookmarks are named: zpoolname/datasetname#bookmarkname

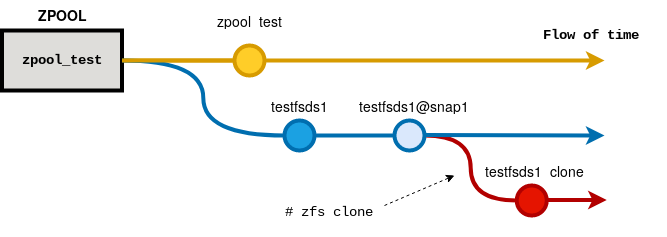

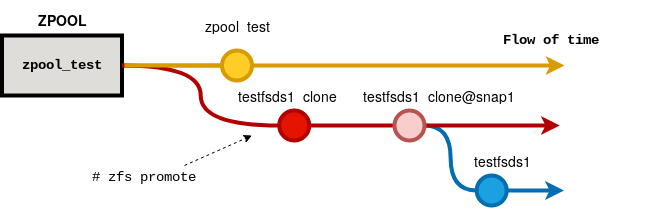

- clones are writable "views" of snapshots that can be mounted in the system VFS, just like a filesystem can. The catch is that a clone introduces a parent-child dependency with the filesystem it originates from. Once a clone has been created, the original dataset can no longer be destroyed as long as the clone exists. That parent-child relationship can be reversed by promoting the clone: the clone becomes the parent and the original filesystem becomes the child. This also impacts the snapshots of the original filesystem, which are "reversed" as well.

- zvolumes are emulated block devices, usable just like any physical hard drive. They appear as block special device entries under the directory /dev/zvol/zpoolname.
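The naming rules above are enough to tell snapshots and bookmarks apart from other datasets. The bash functions below are a minimal sketch of that decoding. Their names (zfs_name_kind, zfs_name_dataset, zfs_name_pool) are hypothetical and not part of the zfs command. They look only at the syntax, so filesystems and zvolumes cannot be distinguished. The assumption is that zfs get -H -o value type is the authoritative way to do that.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, not part of the zfs command: decode a ZFS name using
# only the naming rules described above.
#   zpoolname/datasetname@snapshotname -> snapshot
#   zpoolname/datasetname#bookmarkname -> bookmark
#   anything else                      -> filesystem or zvolume (same syntax;
#   `zfs get -H -o value type <name>` is needed to tell them apart)
zfs_name_kind() {
    case "$1" in
        *@*) echo "snapshot" ;;
        *#*) echo "bookmark" ;;
        *)   echo "filesystem-or-zvolume" ;;
    esac
}

# Dataset part of the name: everything before the first '@' or '#'.
zfs_name_dataset() {
    echo "${1%%[@#]*}"
}

# Pool part of the name: everything before the first '/', '@' or '#'.
zfs_name_pool() {
    echo "${1%%[/@#]*}"
}

zfs_name_kind zpool_test/fds1@monday      # prints: snapshot
zfs_name_kind zpool_test/fds1#monday      # prints: bookmark
zfs_name_dataset zpool_test/fds1#monday   # prints: zpool_test/fds1
zfs_name_pool zpool_test/fds1/fds1_1      # prints: zpool_test
```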

Filesystem datasets

Whenever a zpool is created, a first filesystem dataset with the same name as the zpool is also created. This root dataset can never be destroyed, except by destroying the zpool itself. All subsequently created datasets are uniquely identified by their "path" and are always situated somewhere under this root dataset. "Somewhere", because filesystem datasets can be nested and thus form a hierarchy.

To illustrate, let's (re)create the zpool zpool_test:

root #zpool create zpool_test raidz /dev/ram0 /dev/ram1 /dev/ram2 /dev/ram3

root #zfs list

NAME         USED  AVAIL  REFER  MOUNTPOINT
zpool_test   541K  5.68T   140K  /zpool_test

The name given to the root dataset (column NAME) is zpool_test, the same name as the zpool. This dataset has a mountpoint defined (shown in the column MOUNTPOINT), so it is a filesystem dataset. Let's check that:

root #mount | grep zpool_test

zpool_test on /zpool_test type zfs (rw,xattr,noacl)

Notice that the filesystem type is reported as zfs, meaning it is a filesystem dataset. What does it contain?

root #ls -la /zpool_test

total 33
drwxr-xr-x  2 root root  2 May 8 19:15 .
drwxr-xr-x 24 root root 26 May 8 19:15 ..

Nothing! Now, create additional filesystem datasets by issuing the commands below (notice the "paths" are specified without a leading slash):

root #zfs create zpool_test/fds1

root #zfs create zpool_test/fds1/fds1_1

root #zfs create zpool_test/fds1/fds1_1/fds1_1_1

root #zfs create zpool_test/fds1/fds1_1/fds1_1_2

root #zfs create zpool_test/fds2

root #zfs create zpool_test/fds2/fds2_1

The result of these operations is:

root #zfs list

NAME                              USED  AVAIL  REFER  MOUNTPOINT
zpool_test                       2.44M  5.68T   947K  /zpool_test
zpool_test/fds1                   418K  5.68T   140K  /zpool_test/fds1
zpool_test/fds1/fds1_1            279K  5.68T   140K  /zpool_test/fds1/fds1_1
zpool_test/fds1/fds1_1/fds1_1_1   140K  5.68T   140K  /zpool_test/fds1/fds1_1/fds1_1_1
zpool_test/fds1/fds1_1/fds1_1_2   140K  5.68T   140K  /zpool_test/fds1/fds1_1/fds1_1_2
zpool_test/fds2                   279K  5.68T   140K  /zpool_test/fds2
zpool_test/fds2/fds2_1            140K  5.68T   140K  /zpool_test/fds2/fds2_1

Interestingly, by default the mountpoint hierarchy mirrors the hierarchy of the filesystem datasets. Mountpoints can be customized if needed and can even follow a totally different scheme. The filesystem dataset hierarchy itself can be altered by renaming datasets (zfs rename). For example, suppose zpool_test/fds1/fds1_1/fds1_1_2 should now become zpool_test/fds3:

root #zfs rename zpool_test/fds1/fds1_1/fds1_1_2 zpool_test/fds3

root #tree /zpool_test

/zpool_test
├── fds1
│   └── fds1_1
│       └── fds1_1_1
├── fds2
│   └── fds2_1
└── fds3

7 directories, 0 files

zfs list now gives:

root #zfs list

NAME                              USED  AVAIL  REFER  MOUNTPOINT
zpool_test                       1.71M  5.68T   151K  /zpool_test
zpool_test/fds1                   430K  5.68T   140K  /zpool_test/fds1
zpool_test/fds1/fds1_1            291K  5.68T   151K  /zpool_test/fds1/fds1_1
zpool_test/fds1/fds1_1/fds1_1_1   140K  5.68T   140K  /zpool_test/fds1/fds1_1/fds1_1_1
zpool_test/fds2                   279K  5.68T   140K  /zpool_test/fds2
zpool_test/fds2/fds2_1            140K  5.68T   140K  /zpool_test/fds2/fds2_1
zpool_test/fds3                   140K  5.68T   140K  /zpool_test/fds3

ZFS has also changed the mountpoint accordingly.
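The mountpoint follows the rename because it is inherited. Roughly, the nearest ancestor with an explicitly set mountpoint provides the base path, and the names of the datasets below it are appended. Without any explicit value, the root dataset is mounted at /zpoolname. The bash function below is a minimal sketch of that rule. Its name, zfs_effective_mountpoint, and its dataset=mountpoint argument syntax are hypothetical. It assumes no altroot is in use and models only the mountpoint property, not the actual mount state.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of the zfs command: compute the mountpoint a
# filesystem dataset ends up with when it inherits its mountpoint property.
# Rule modelled: the nearest ancestor (or the dataset itself) with an
# explicitly set mountpoint gives the base path and the dataset names below
# it are appended; with no explicit value the root dataset is /<zpoolname>.
# Assumption: no altroot is in use.
# Usage: zfs_effective_mountpoint <dataset> [<dataset>=<mountpoint> ...]
zfs_effective_mountpoint() {
    local name="$1"; shift
    local -A explicit=()
    local pair
    for pair in "$@"; do
        explicit["${pair%%=*}"]="${pair#*=}"
    done

    local ancestor="$name" suffix="" value
    while :; do
        if [[ -n "${explicit[$ancestor]+set}" ]]; then
            value="${explicit[$ancestor]}"
            # "none" and "legacy" are inherited as-is, with no path appended
            if [[ "$value" == none || "$value" == legacy ]]; then
                echo "$value"
            else
                value="${value%/}${suffix}"   # avoid "//" when the base is "/"
                echo "${value:-/}"
            fi
            return
        fi
        if [[ "$ancestor" != */* ]]; then
            echo "/${ancestor}${suffix}"      # default: /<zpoolname>/...
            return
        fi
        suffix="/${ancestor##*/}${suffix}"    # prepend this level's name
        ancestor="${ancestor%/*}"             # climb to the parent dataset
    done
}

# Defaults simply follow the dataset hierarchy:
zfs_effective_mountpoint zpool_test/fds3                # /zpool_test/fds3
zfs_effective_mountpoint zpool_test/fds3/fds3_1/fds2_1  # /zpool_test/fds3/fds3_1/fds2_1
# With a customized mountpoint on an ancestor:
zfs_effective_mountpoint zpool_test/fds1/fds1_1 "zpool_test/fds1=/srv/data"  # /srv/data/fds1_1
```

This also explains why renaming a dataset that has children moves their mountpoints along with it.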

Even better: rename a filesystem dataset that has children under it, like zpool_test/fds2, which becomes zpool_test/fds3/fds3_1:

root #zfs rename zpool_test/fds2 zpool_test/fds3/fds3_1

root #zfs list